Journal of Law, Information and Science


Privacy and Social Media: An Analytical Framework

ROGER CLARKE[*]

Social media services offer users tools for interaction, publishing and sharing, but in return demand exposure of users' selves and of personal information about the members of their social networks. The Terms of Service imposed by providers are uniformly privacy-hostile. The practice of social media exhibits a great many distrust influencers, and some are sufficiently strong that they constitute distrust drivers. This paper presents an analytical framework whereby designers of social media services can overcome user distrust and inculcate user trust.

Social media is a collective term for a range of services that support users in exchanging content and pointers to content, but in ways that are advantageous to the service-provider. These services emerged in conjunction with the ‘Web 2.0’ and ‘social networking’ notions, during 2004-05.[1]

As shown by Best[2] and Clarke,[3] there was little terminological clarity or coherence about ‘Web 2.0’. Similarly, the understanding of what social media encompasses remains somewhat rubbery. For example, Kaplan and Haenlein define social media as ‘a group of Internet-based applications that build on the ideological and technological foundations of Web 2.0, and that allow the creation and exchange of User Generated Content’.[4] Those authors did, however, apply theories from the fields of media research (social presence, media richness) and social processes (self-presentation, self-disclosure), in order to propose the classification scheme in Table 1.

Social network analysis is a well-established approach to modelling actors and the ‘ties’ or linkages among them. It generally emphasises the importance of the linkages, and underplays or even ignores the attributes of the actors and other aspects of the context within which each actor operates.[5] Various forms of graphing and inferencing techniques have been harnessed by social media service-providers. In a social media context, networks may be based on explicit linkages such as bookmarking, ‘friending’, following, ‘liking’/‘+1’ and endorsing, on communications linkages such as email and chat messages, or on implicit linkages such as tagging, visiting the same sites, purchasing the same books, etc.

| Self-presentation/Self-disclosure | Social presence/Media richness: Low | Social presence/Media richness: Medium | Social presence/Media richness: High |
|---|---|---|---|
| High | Blogs | Social networking sites (eg Facebook) | Virtual social worlds (eg Second Life) |
| Low | Collaborative projects (eg Wikipedia) | Content communities (eg YouTube) | Virtual game worlds (eg World of Warcraft) |

Table 1: Kaplan and Haenlein’s Classification of Social Media

The motivation for social media service-providers is to attract greater traffic on their sites. They therefore actively seek network effects[6] based on positive feedback loops, preferably of the extreme variety referred to as ‘bandwagon effects’. These depend on the generation of excitement, and the promotion of activities of individuals to large numbers of other individuals, based on both established linkages and inferred affinities. An important element of commercially successful social media is the encouragement of exhibitionism by a critical mass of users, in order to stimulate voyeuristic behaviour (in the sense of gratification through observation) by many users. For the provider, exhibitionism of self is useful, but the exposure of others is arguably even more valuable.[7]

Another insight from social network theory is that links among content-items are important to providers not so much because they provide a customer service, but because they support social linkages.[8] The major social media services place much less emphasis on enabling close management of groups such as ‘cliques’ (tightly-knit groups) and ‘social circles’ (looser groups), because activity within small sub-nets serves the provider’s interests far less well than open activity. This has significant negative implications for the privacy of the many individuals who participate in social circles or cliques whose norm is to respect the confidentiality of transactions occurring within those groups.

Consumer marketing companies generally perceive the need to convey corporate image and messages, shape consumer opinions, and build purchase momentum; and social media has been harnessed to those purposes. The Kaplan and Haenlein classification scheme is a good fit to the perspectives of both service-providers and consumer-facing corporations. On the other hand, through its commitment to the ‘consumer as prey’ tradition,[9] the scheme fails to reflect the interests of the users whom social media services exploit. A classification scheme would better serve users of social media if it focussed on its features and affordances in the areas of human interaction, content broadcast and content collaboration.[10]

Social media services offer varying mixes of features. These can be classified as:[11]

• interaction tools (eg email, chat/IM, SMS, voice and video-conferencing);

• broadcast tools (eg web-pages and their closed equivalent of ‘wall-postings’, blogs, micro-blogs, content communities for images, videos and slide-sets, and locations); and

• sharing tools (eg wikis, social bookmarking, approvals and disapprovals, social gaming).

A review of social media services since 2000 identifies waves that have been centred on, successively, blogs (eg Wordpress, Blogspot, LiveJournal), voice-calls (Skype), social gaming (Friendster, Zynga), virtual worlds (Second Life), social bookmarking (Delicious), approvals (Digg, Reddit), video communities (YouTube, Vine), image communities (Flickr, Picasa, Instagram, Pinterest), social networking (Plaxo, LinkedIn, Xing, Orkut, Facebook, Buzz, Google+), micro-blogs (Twitter, Tumblr), location (Foursquare, Latitude) and back to messaging (WhatsApp, Snapchat). Some social media services have proven to be short-lived fads. Some appear to be instances of longer-lived genres, although, even in these cases, waves of differently-conceived services have been crashing over one another in quick succession. Some aspects may mature into long-term features of future services, because they satisfy a deeper human need rather than just a fashion-driven desire.

Consumers’ hedonistic desires may be well-served by contemporary social media services. On the other hand, a proportion of users understand that they are being exploited by social media service-providers. The boldness and even arrogance of many of those providers has given rise to a growing body of utterances by influential commentators, which has caused many more users to become aware of the extent of the exploitation.[12] Consumer and privacy concerns are legion. Combined with other factors, these concerns are giving rise to doubts about whether sufficient trust exists for the first decade’s momentum in social media usage to be sustained.[13]

Privacy has long loomed as a strategic factor for both corporations and government agencies.[14] Despite this, social media services that are committed to privacy-friendliness have been conspicuous by their absence. Attempts at consumer-oriented social media, such as Diaspora, duuit, Gnu social and Open Social, have all faltered. Snapchat nominally supports ephemeral (‘view once’) messaging, but it has been subject to a formal complaint that its published claims are constructively misleading.[15]

I have suggested elsewhere that the long wait for the emergence of the ‘prosumer’ may be coming to a close.[16] That term was coined by Toffler in 1980, to refer to a phenomenon he had presaged 10 years earlier.[17] The concept was revisited in Tapscott and Williams,[18] applied in Brown and Marsden,[19] and has been extended to the notion of ‘produser’ in Bruns.[20] A prosumer is a consumer who is proactive (eg is demanding, and expects interactivity with the producer) and/or a producer as well as a consumer (eg one who expects to be able to exploit digital content for mashups). To the extent that a sufficient proportion of consumers do indeed mature into prosumers, consumer dissatisfaction with untrustworthy social media service-providers can be expected to rise, and to influence consumers’ choices.

The need therefore exists for means whereby the most serious privacy problems can be identified, and ways can be devised to overcome them. This paper commences with a review of the nature of privacy, followed by application of the core ideas to social media. The notion of trust is then considered, and operational definitions are proposed for a family of concepts. Implications for social media design are drawn from that analytical framework, including constructive proposals for addressing privacy problems to the benefit of consumers and service-providers alike. Research opportunities arising from the analysis are identified.

This section commences by summarising key aspects of privacy. It then applies them to social media.

1.1 Privacy

Privacy is a multi-dimensional construct rather than a concept, and hence definitions are inevitably contentious. Many of the conventional approaches are unrealistic, serve the needs of powerful organisations rather than those of individuals, or are of limited value as a means of guiding operational decisions. Other treatments include those of Schoeman,[21] Hirshleifer,[22] Lindsay[23] and Nissenbaum.[24]

The approach taken here is to adopt a definition of privacy as an interest, to retain its positioning as a human right, to reject the attempts on behalf of business interests to reduce it to a mere economic right,[25] and to supplement the basic definition with a discussion of the multiple dimensions inherent in the construct.

The following definition is of long standing:[26]

Privacy is the interest that individuals have in sustaining a ‘personal space’, free from interference by other people and organisations.

A weakness in discussions of privacy throughout the world is the limitation of the scope to data protection/Datenschutz. It is vital to recognise that privacy is a far broader notion than that. The four-dimensional scheme below has been in consistent use since at least 1996,[27] and a fifth dimension is tentatively added at the end of the discussion.

(1) Privacy of the person

This is concerned with the integrity of the individual’s body. At its broadest, it extends to freedom from torture and the right to medical treatment. Issues include compulsory immunisation, imposed treatments such as lobotomy and sterilisation, blood transfusion without consent, compulsory provision of samples of body fluids and body tissue, requirements for submission to biometric measurement, and, significantly for social media, risks to personal safety arising from physical, communications or data surveillance, or location and tracking.[28]

(2) Privacy of personal behaviour

This is the interest that individuals have in being free to conduct themselves as they see fit, without unjustified surveillance. Particular concern arises in relation to sensitive matters such as sexual preferences and habits, religious practices, and political activities. Some privacy analyses, particularly in Europe, extend this discussion to personal autonomy, liberty and the right to self-determination.

Intrusions into this dimension of privacy have a chilling effect on social, economic and political behaviour. The notion of ‘private space’ is vital to all aspects of behaviour and acts. It is relevant in ‘private places’ such as the home and toilet cubicles, and in ‘public places’, where casual observation by the few people in the vicinity is very different from systematic observation and recording. The recent transfer of many behaviours from physical to electronic spaces has enabled marked increases in behavioural surveillance and consequential threats to privacy, because:

• previously localised human actions may now be observed by others who are not in the vicinity, and whose ability to observe may not be known to the actor(s);

• previously ephemeral human actions have been subjected to sound, image, video and electronic recording, and hence may be observed at a later time;

• previously ephemeral human actions may be re-discovered and re-cycled, on multiple future occasions; and

• social networks that were not previously detectable without intense physical surveillance have become inferrable from electronic traffic.

(3) Privacy of personal communications

Individuals want, and need, the freedom to communicate among themselves, using various media, without routine monitoring of their communications by other persons or organisations. Issues include mail ‘covers’, use of directional microphones and ‘bugs’ with or without recording apparatus, and telephonic interception. The recent transfer of many behaviours from physical to electronic spaces has enabled marked increases in communications surveillance and consequential threats to privacy. This is because:

• previously ephemeral human communication acts have been converted into machine-readable form, and may be at least temporarily stored, particularly in the case of email, chat and SMS, but also VOIP;

• third parties have contrived to gain access to machine-readable communications in transit and in storage, in ways that were previously precluded by longstanding laws protecting individuals against undue intrusions by governments and corporations. This has led to lively battles over ‘data retention’ initiatives; and

• what was once disparaged as conspiracy theory has now been widely accepted as fact: national security agencies of the USA, and of other hitherto relatively free nations, routinely access personal electronic communications, without due cause, and in many cases with dubious legality, without legal authority, or even in outright breach of the law.[29]

(4) Privacy of personal data

This is referred to variously as ‘data privacy’ and ‘information privacy’, and regulatory measures are referred to as ‘data protection’. Individuals claim that data about themselves should not be automatically available to other individuals and organisations, and that, even where data is possessed by another party, the individual must be able to exercise a substantial degree of control over that data and its use. Many analyses focus on this dimension almost to the exclusion of the others.[30]

Recent developments have substantially altered longstanding balances, and created new risks, in particular:

• the capture of sensitive data that was never previously recorded. This includes network location, micro-purchases, net-based purchases, search-terms, visits to sites and accesses to content, plus geo-location, and trails of locations enabling both retrospective and real-time tracking;

• the consolidation of many items of personal data, from many sources, and its exploitation by governments and corporations through data mining and ‘big data’ techniques;[31]

• use, retention and disclosure of the aggregations of personal data, because of the self-interest and overwhelming market power of the small number of service-providers; and

• accessibility of the aggregations of sensitive personal data by government agencies, in many cases without conventional constraints such as judicial warrants, because of the failure of parliaments to adapt longstanding protections to the digital age, and the granting of large numbers of excessive powers to government agencies under the pretext that the powers are necessary as ‘counter-terrorism’ measures.

Underpinning the dramatic escalation of privacy threats since about 1995 has been greatly intensified opposition by organisations to anonymity and even pseudonymity, and greatly increased demands for all acts by all individuals to be associated with the individual’s ‘real identity’.[32] As a result of decreased anonymity, content digitisation, cloud services, government intrusions and copyright-protection powers, consideration now needs to be given to adding one or more further dimensions.[33] The following is a likely candidate fifth dimension:

(5) Privacy of personal experience

Individuals reasonably feel threatened by surveillance of their reading and viewing, inter-personal communications and electronic social networks, and of their physical meetings and electronic associations with other people. A significant industry has developed that infers individuals’ interests and attitudes from personal data mined from a wide array of sources.

The recording of previously private library-search transactions (through web search-engine logs), book-purchases (through eCommerce logs) and reading activities (through eBook logs and licensing databases) is striking far more deeply inside the individual’s psyche than ever before, enabling much more reliable inferencing about the person’s interests, formative influences and attitudes. Concerns have been evident for many years, in the form of arguments for ‘a right to read anonymously’,[34] and a broader ‘right to experience intellectual works in private — free from surveillance’.[35] The diversity and intensity of threats arguably makes it now necessary to recognise the cluster of issues as constituting a fifth dimension of privacy.

The increased concerns evident in the European Union in relation to US corporate and government abuses of the personal data of Europeans[36] appear to embody recognition not only of the privacy of personal communications, data and behaviour, but to some extent also of the privacy of personal experience.

1.2 Privacy applied to social media

At an early stage, commentators identified substantial privacy threats inherent in Web 2.0, social networking services and social media generally.[37] Although privacy threats arise in relation to all categories of social media, social networking services (SNS) are particularly rich both in inherent risks and in aggressive behaviour by service-providers. This section accordingly pays particular attention to SNS.

One of the earliest SNS, Plaxo, was subjected to criticism at the time of its launch.[38] Google had two failures, Orkut and Buzz, before achieving moderate market penetration with Google+. All three have been roundly criticised for their serious hostility to the privacy not only of their users but also of people exposed by their users.[39] Subsequently, Google’s lawyers have argued that users of Gmail, and by implication of all other Google services, ‘have no legitimate expectation of privacy’.[40]

However, it is difficult not to focus on Facebook, not so much because it has dominated many national markets for SNSs for several years, but rather because it has done so much to test the boundaries of privacy abuse. Summaries of its behaviour can be found in Bankston,[41] Opsahl,[42] The New York Times,[43] McKeon,[44] boyd and Hargittai[45] and the British Broadcasting Corporation (BBC).[46] Results of a survey are reported in Lankton and McKnight.[47]

After five years of bad behaviour by Facebook, Opsahl summarised the situation as follows:

When [Facebook] started, it was a private space for communication with a group of your choice. Soon, it transformed into a platform where much of your information is public by default. Today, it has become a platform where you have no choice but to make certain information public, and this public information may be shared by Facebook with its partner websites and used to target ads.[48]

The widespread publication of several epithets allegedly uttered by Facebook’s CEO Mark Zuckerberg has reinforced the impression of exploitation, particularly ‘[w]e are building toward a web where the default is social’[49] and ‘[t]hey “trust me”. Dumb f..ks’.[50] These were exacerbated by the hypocrisy of Zuckerberg’s marketing executive and sister in relation to the re-posting of a photograph of her on Twitter, documented in Adams.[51] Some of the most memorable quotations in relation to privacy and social media have been self-serving statements by executives in the business, such as Scott McNealy’s ‘[y]ou have zero privacy anyway. Get over it’[52] and Eric Schmidt’s ‘[i]f you have something that you don’t want [Google and its customers] to know, maybe you shouldn’t be doing it in the first place’.[53]

The conclusion reached in 2012 by a proponent of social media was damning:

[The social networking service] makes profit primarily by using heretofore private information it has collected about you to target advertising. And Zuckerberg has repeatedly made sudden, sometimes ill conceived and often poorly communicated policy changes that resulted in once-private personal information becoming instantly and publicly accessible. As a result, once-latent concerns over privacy, power and profit have bubbled up and led both domestic and international regulatory agencies to scrutinize the company more closely ... The high-handed manner in which members’ personal information has been treated, the lack of consultation or even communication with them beforehand, Facebook’s growing domination of the entire social networking sphere, Zuckerberg’s constant and very public declarations of the death of privacy and his seeming imposition of new social norms all feed growing fears that he and Facebook itself simply can not be trusted.[54]

Social media features can be divided into three broad categories. In the case of human interaction tools, users generally assume that conversations are private, and in some cases are subject to various forms of non-disclosure conventions. However, social media service-providers sit between the conversation-partners and in almost all cases store the conversation — thereby converting ephemeral communications into archived statements — and give themselves the right to exploit the contents. Moreover, service-providers strive to keep the messaging flows internal, and hence exploitable by, and only by, that company, rather than enabling their users to take advantage of external and standards-based messaging services such as email.

The second category of social media features, content broadcast, by its nature involves publication. Privacy concerns still arise, however, in several ways. SNS providers, in seeking to capture ‘wall-postings’, encourage, and in some cases even enforce, self-exposure of profile data. A further issue is that service-providers may intrude into users’ personal space by monitoring content with a view to punishing or discriminating against individuals who receive particular broadcasts, eg because the content is in breach of criminal or civil law, is against the interests of the service-provider, or is deemed by the service-provider to be in breach of good taste. The primary concern, however, is that the individual who initiates the broadcast may not be able to protect their identity. This is important where the views may be unpopular with some organisations or individuals, particularly where those organisations or individuals may represent a threat to the person’s safety. It is vital to society that ‘whistleblowing’ be possible. There are contrary public interests, in particular that individuals who commit serious breaches such as unjustified disclosure of sensitive information, intentionally harmful misrepresentation, and incitement to violence, be able to be held accountable for their actions. That justifies the establishment of carefully constructed forms of strong pseudonymity; but it does not justify an infrastructure that imposes on all users the requirement to disclose, and perhaps openly publish, their ‘real identity’.

The third category of social media features, content collaboration, overlaps with broadcasting, but is oriented towards shared or multi-sourced content rather than sole-sourced content. SNS providers exploit the efforts of content communities — an approach usefully referred to as ‘effort syndication’.[55] This gives rise to a range of privacy issues. Even the least content-rich form, indicator-sharing, may generate privacy risk for individuals, such as the casting of a vote in a particular direction on some topic that attracts opprobrium (eg paedophilia, racism or the holocaust, but also criticisms of a repressive regime).

The current, somewhat chaotic state of social media services involves significant harm to individuals’ privacy, which is likely to progressively undermine suppliers’ business models. Constructive approaches are needed to address the problems.

This section proposes a basis for a sufficiently deep understanding of the privacy aspects of social media, structured in a way that guides design decisions. It first reviews the notion of trust. In both the academic and business literatures, the focus has been almost entirely on the positive notion of trust, frequently to the complete exclusion of the negative notion of distrust. It is argued here that both concepts need to be understood and addressed. A framework and definitions are proposed that enable the varying impacts of trust-relevant factors to be recognised and evaluated.

2.1 Concepts

Trust originates in family and social settings, and is associated with cultural affinities and inter-dependencies. This paper is concerned with factors that relate to the trustworthiness or otherwise of other parties to a social or economic transaction. Trust in another party is associated with a state of willingness to expose oneself to risks.[56] Rather than treating trust as ‘expectation of the persistence and fulfilment of the natural and the moral orders’,[57] Yamagishi distinguishes ‘trust as expectations of competence’ and ‘trust as expectations of intention’.[58] The following is proposed as an operational definition relevant to the contexts addressed in this paper:[59]

Trust is confident reliance by one party on the behaviour of one or more other parties.

The importance of trust varies a great deal, depending on the context. Key factors include the extent of the risk exposure, the elapsed time during which the exposure exists, and whether insurance is available, affordable and effective. Trust is most critical where a party has little knowledge of the other party, or far less power than the other party.

The trust concept has been applied outside its original social setting. Of relevance to the present paper, it is much-used in economic contexts: ‘From a rational perspective, trust is a calculation of the likelihood of future cooperation’.[60] This paper is concerned with the trustworthiness or otherwise of a party to a transaction, rather than, for example, of the quality of a tradeable item, of its fit to the consumer’s need, of the delivery process, or of the infrastructure and institutions on which the conduct of the transaction depends. The determinants of trust have attracted considerable attention during the two decades after 1994, as providers of web-commerce services endeavoured to overcome impediments to the adoption of electronic transactions between businesses and consumers, popularly referred to as B2C eCommerce. The B2C eCommerce literature contains a great many papers that refer to trust, and that investigate very specific aspects of it.[61]

It is feasible for cultural affinities to be achieved in some B2C contexts. For example, consumers’ dealings with cooperatives, such as credit unions, may achieve this, because ‘they are us’. On the other hand, it is infeasible for for-profit corporations to achieve anything more than an ersatz form of trust with their customers, because corporations law demands that priority be given to the interests of the corporation above all other interests.

I have previously analysed the bases on which a proxy for positive trust can be established in B2C eCommerce.[62] Trust may arise from a direct relationship between the parties (such as a contract, or prior transactions); or from experience (such as a prior transaction, a trial transaction, or vicarious experience). When such relatively strong sources of trust are not available, it may be necessary to rely on ‘referred trust’, such as delegated contractual arrangements, ‘word-of-mouth’, or indicators of reputation. Ignoring the question of preparedness to transact, empirical evidence from studies of eBay transactions suggests that ‘the quality of a seller’s reputation has a consistent, statistically significant, and positive impact on the price of the good’.[63] The fact that reputation’s impact on price ‘tends to be small’[64] is consistent with the suggestion that reputation derived from reports by persons unknown is of modest rather than major importance.

Mere brand names are a synthetic and ineffective basis for trust. The weakest of all forms is meta-brands, such as ‘seals of approval’ signifying some form of accreditation by organisations that are no better-known than the company that they purport to be attesting to.[65] The organisations that operate meta-brands generally protect their own interests with considerably more vigour than they do the interests of consumers.

Where the market power of the parties to a transaction is reasonably balanced, the interests of both parties may be reflected in the terms of the contracts that they enter into. This is seldom the case with B2C commerce generally, however, nor with B2C eCommerce in particular. It is theoretically feasible for consumers to achieve sufficient market power to ensure balanced contract terms, in particular through amalgamation of their individual buying-power, or through consumer rights legislation. In practice, however, an effective balance of power seldom arises.

Many recent treatments of trust in B2C eCommerce adopt the postulates of Mayer, Davis and Schoorman,[66] to the effect that the main attributes underlying a party’s trustworthiness are ability, integrity and benevolence — to which website quality has subsequently been added. See for example Lee and Turban[67] and Salo and Karjaluoto.[68] However, it appears unlikely that a body of theory based on the assumption of altruistic behaviour by for-profit organisations is capable of delivering much of value. In practice, consumer marketing corporations seek to achieve trust by contriving the appearance of cultural affinities. One such means is the offering of economic benefits to frequent buyers, but projecting the benefits using not an economic term but rather one that invokes social factors: ‘loyalty programs’. Another approach is to use advertising, advertorials and promotional activities to project socially positive imagery onto a brand name and logo. To be relevant to the practice of social media, research needs to be based on models that reflect realities, rather than embody imaginary constructs such as ‘corporate benevolence’.

During the twentieth century’s ‘mass marketing’ and ‘direct marketing’ eras, consumer marketing corporations were well-served by the conceptualisation of consumer as prey. That stance continued to be applied when B2C eCommerce emerged during the second half of the 1990s. That the old attitude fitted poorly to the new context was evidenced by the succession of failed initiatives documented by Clarke.[69] These included billboards on the information superhighway (1994-95), closed electronic communities (1995-97), push technologies (1996-98), spam (1996-), infomediaries (1996-99), portals (1998-2000) and surreptitious data capture (1999-). Habits die hard, however, and to a considerable extent consumers are still being treated as quarry by consumer marketers. It is therefore necessary to have terms that refer to the opposite of trust.

The convention has been to assume that trust is either present, or it is not, and hence:

Lack of Trust is the absence, or inadequacy, of confidence by one party in the reliability of the behaviour of one or more other parties.

This notion alone is not sufficient, however, because it fails to account for circumstances in which, rather than there being either a positive feeling or an absence of it, there is instead a negative element present, which represents a positive impediment rather than merely the lack of a positive motivator to transact. The concept of ‘negative ratings’[70] falls short of that need. This author accordingly proposes the following additional term and definition:

Distrust is the active belief by one party that the behaviour of one or more other parties is not reliable.

There are few treatments of distrust in the B2C eCommerce literature, but see McKnight and Chervany,[71] and the notion of ‘trustbuster’ in Riegelsberger and Sasse.[72]

One further concept is needed, in order to account for the exercise of market power in B2C eCommerce. Most such business is subject to Terms of Service imposed by merchants. These embody considerable advantages to the companies and few for consumers. The terms imposed by social media companies are among the most extreme seen in B2C eCommerce.[73] In such circumstances, there is no trust grounded in cultural affinity. The consumer may have a choice of merchants, but the terms that the merchants impose are uniformly consumer-hostile. Hence, a separate term is proposed, to refer to a degraded form of trust:

Forced Trust is hope held by one party that the behaviour of one or more other parties will be reliable, despite the absence of important trust factors.

2.2 Categories of trust factor

The concepts presented in the previous sub-section provide a basis for developing insights into privacy problems and their solutions. In order to operationalise the concepts, however, it is necessary to distinguish several different categories of trust factor.

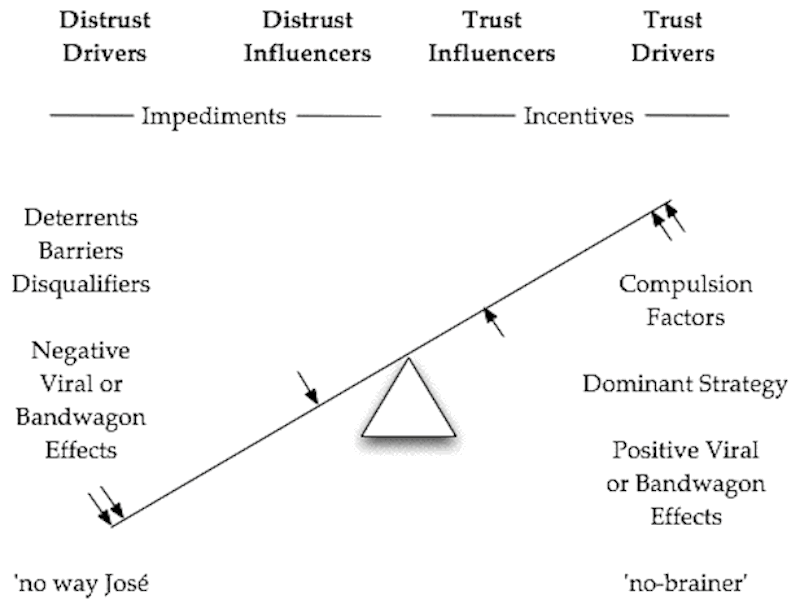

A Driver is a factor that, alone, is sufficient to determine an adoption/non-adoption decision. This is distinct from the ‘straw that broke the camel’s back’ phenomenon. In that case, the most recent Influencer causes a threshold to be reached, but only by adding its weight to other, pre-existing Influencers.

The definitions in Figure 1 use the generic terms ‘party’ and ‘conduct of a transaction’, in order to provide broad scope. They encompass both social and economic transactions, and both natural persons as parties — variously in social roles, as consumers, as prosumers, and as producers — and legal persons, including social not-for-profit associations, economic not-for-profits such as charities, for-profit corporations, and government agencies.

A Trust Influencer is a factor that has a positive influence on the likelihood of a party conducting a transaction.

A Distrust Influencer is a factor that has a negative influence on the likelihood of a party conducting a transaction.

A Trust Driver is a factor that has such a strong positive influence on the likelihood of a party conducting a transaction that it determines the outcome.

A Distrust Driver is a factor that has such a strong negative influence on the likelihood of a party conducting a transaction that it determines the outcome.

Figure 1: The Four Categories of Trust Factor

In Figure 2, the relationships among the concepts are depicted in the mnemonic form of a see-saw, and are supplemented by commonly used terms associated with each category.

Figure 2: A Depiction of the Categories of Trust Factor

Some trust factors are applicable to consumers generally, and hence the distinctions can be applied to an aggregate analysis of the market for a particular tradeable item or class of items. Attitudes to many factors vary among consumers, however; and hence the framework can be usefully applied at the most granular level, that is, to individual decisions by individual consumers. For many purposes, an effective compromise is likely to be achieved by identifying consumer segments, and conducting analyses from the perspective of each segment. Hence, a driver is likely to be described in conditional terms, such as ‘for consumers who are risk-averse...’, ‘for consumers who live outside major population centres...’, and ‘for consumers who are seeking a long-term service...’.

The commercial aspects of the relationship between merchant and consumer offer many examples of each category of trust factor. Distrust Drivers include proven incapacity of the merchant to deliver, such as insolvency. On the other hand, low cost combined with functional superiority represents a Trust Driver. Non-return policies are usually a Distrust Influencer rather than a Distrust Driver, whereas ‘return within 7 days for a full refund’ is likely to be a Trust Influencer. Merchants naturally play on human frailties in an endeavour to convert Influencers into Drivers, through such devices as ‘50% discount, for today only’.
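The distinction between Drivers and Influencers can be illustrated with a minimal Python sketch. It is an illustration under stated assumptions, not a formal model drawn from the framework: the names `Category`, `Factor` and `decide`, the numeric weights, the zero threshold, and the treatment of a simultaneous Trust Driver and Distrust Driver as a 'conflict' are all hypothetical. Only the four categories and the example factors are taken from the text above.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Iterable


class Category(Enum):
    TRUST_INFLUENCER = "trust influencer"
    DISTRUST_INFLUENCER = "distrust influencer"
    TRUST_DRIVER = "trust driver"
    DISTRUST_DRIVER = "distrust driver"


@dataclass(frozen=True)
class Factor:
    name: str
    category: Category
    weight: float = 1.0  # hypothetical magnitude, used only for Influencers


def decide(factors: Iterable[Factor], threshold: float = 0.0) -> str:
    """Return 'adopt', 'reject' or 'conflict' for one consumer (or segment)."""
    factors = list(factors)
    present = {f.category for f in factors}
    # Assumption: the framework does not say what happens when opposing
    # Drivers coincide, so the sketch merely flags the case.
    if Category.TRUST_DRIVER in present and Category.DISTRUST_DRIVER in present:
        return "conflict"
    # A Driver alone determines the outcome, whatever the Influencers say.
    if Category.DISTRUST_DRIVER in present:
        return "reject"
    if Category.TRUST_DRIVER in present:
        return "adopt"
    # Influencers only add their weight to either side of the see-saw.
    trust = sum(f.weight for f in factors
                if f.category is Category.TRUST_INFLUENCER)
    distrust = sum(f.weight for f in factors
                   if f.category is Category.DISTRUST_INFLUENCER)
    return "adopt" if trust - distrust > threshold else "reject"


# Example factors taken from the text; the weights are invented.
refund = Factor("return within 7 days for a full refund",
                Category.TRUST_INFLUENCER, 1.0)
no_returns = Factor("non-return policy", Category.DISTRUST_INFLUENCER, 0.6)
insolvency = Factor("merchant insolvency", Category.DISTRUST_DRIVER)
bargain = Factor("low cost combined with functional superiority",
                 Category.TRUST_DRIVER)

print(decide([refund]))               # Influencer tips the balance
print(decide([refund, insolvency]))   # Distrust Driver overrides it
print(decide([no_returns, bargain]))  # Trust Driver overrides it
```

A segment-level analysis of the kind described above could be approximated by calling `decide` once per consumer segment, with each segment assigned its own categories and weights for the same underlying features.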

Beyond commercial aspects, other clusters of trust factors include the quality, reliability and safety of the tradeable item, its fit to the consumer’s circumstances and needs, and privacy. This paper is concerned with the last of these.

The analytical framework introduced in the previous section provides a basis for improving the design of social media services in order to address the privacy concerns of consumers and the consequential privacy risks of service-providers.

3.1 The design of social media services

The categorisation of trust factors provides designers with a means of focussing on the aspects that matter most. Hedonism and fashion represent drivers for the adoption and use of services. The first priority therefore is to identify and address the Distrust Drivers that undermine adoption of the provider’s services.

Some privacy factors will have a significant negative impact on all or most users. Many, however, will be relevant only to particular customer-segments, or will only be relevant to particular users in particular circumstances or for a particular period of time. An important example of a special segment is people whose safety is likely to be threatened by exposure, perhaps of their identity, their location, or sensitive information about them.[74] Another important segment is people who place very high value on their privacy, preferring (for any of a variety of reasons) to stay well out of the public eye.

A detailed analysis of specific privacy features is in a companion paper.[75] Key examples of features that may be Distrust Drivers are reliance on what was referred to in the above framework as ‘forced trust’, requirements that the user declare their commonly-used identity or so-called ‘real name’, and default disclosure of the user’s geo-location to the service-provider.

The distrust analysis needs to extend to factors that are influencers rather than drivers, because moderate numbers of negative factors, particularly if they frequently rise into a user’s consciousness, are likely to morph into an aura of untrustworthiness, and thereby cause the relationship with the user to be fragile rather than loyal.

Examples of active measures that can be used to negate distrust include: transparency in relation to Terms of Service;[76] the conduct of a privacy impact assessment, including focus groups and consultations with advocacy organisations;[77] mitigation measures where privacy risks arise; prepared countermeasures where actual privacy harm arises; and prepared responses to enquiries and accusations about privacy harm.

Although overcoming distrust offers the greater payback to service-providers, they may benefit from attention to Trust Drivers and Trust Influencers as well. In many cases this will also benefit users. Factors of relevance include: privacy-settings that are comprehensive, clear and stable; conservative defaults; means for users to manage their profile-data; consent-based rather than unilateral changes to Terms of Service; express support for pseudonymity and multiple identifiers; and inbuilt support and guidance in relation to proxy-servers and other forms of obfuscation. Some users will be attracted by the use of peer-to-peer (P2P) architecture with dispersed storage and transmission paths rather than the centralisation of power that is inherent in client-server architecture.

3.2 Research opportunities

The copious media coverage of privacy issues in the social media services marketplace provides considerable raw material for research.[78] A formal research literature is only now emerging, and opportunities exist for significant contributions to be made.

The analysis conducted in this paper readily gives rise to a number of research questions. For example:

• which factors are drivers and which are merely influencers?

• does their role depend on contextual factors, and if so what are they?

• what are the trade-offs among influencers?

• how much do people sell privacy for?

• how much do people pay to buy privacy?

• to what extent do personal and contextual factors determine drivers?

• to what extent do personal and contextual factors affect trade-offs?

An example of the kinds of trade-off research that would pay dividends is provided by Kaplan and Haenlein:

In December 2008, the fast food giant [Burger King] developed a Facebook application which gave users a free Whopper sandwich for every 10 friends they deleted from their Facebook network. The campaign was adopted by over 20,000 users, resulting in the sacrificing of 233,906 friends in exchange for free burgers. Only one month later, in January 2009, Facebook shut down Whopper Sacrifice, citing privacy concerns. Who would have thought that the price of a friendship is less than $2 a dozen?[79]

The vignette suggests that the presumption that people sell their privacy very cheaply may have a corollary — that people’s privacy can be bought very cheaply as well.
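The arithmetic behind the vignette can be made explicit. The following Python sketch uses only the figures quoted above. The price of a Whopper is not given in the source, so it is left as a hypothetical parameter, and the names used are illustrative only.

```python
FRIENDS_PER_WHOPPER = 10      # from the quoted campaign rules
FRIENDS_SACRIFICED = 233_906  # from the quoted passage
USERS_AT_LEAST = 20_000       # 'over 20,000 users', so a lower bound

# Upper bound on burgers earned: per-user remainders below 10 earn nothing.
max_whoppers = FRIENDS_SACRIFICED // FRIENDS_PER_WHOPPER

# Upper bound on the average number of friends deleted per user.
max_friends_per_user = FRIENDS_SACRIFICED / USERS_AT_LEAST


def price_per_dozen_friends(whopper_price: float) -> float:
    """Implied price of 12 friendships for a given (hypothetical) burger price."""
    return 12 * whopper_price / FRIENDS_PER_WHOPPER


# Burger price below which a dozen friends cost less than $2.
break_even_price = 2 * FRIENDS_PER_WHOPPER / 12

print(max_whoppers)
print(round(max_friends_per_user, 1))
print(round(break_even_price, 2))
```

On these figures, the quoted ‘less than $2 a dozen’ holds only if the value attributed to a Whopper is below about $1.67. This shows how sensitive such trade-off estimates are to the valuation assumed for the inducement.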

A wide range of research techniques can be applied to such studies.[80] Surveys deliver ‘convenience data’ of limited relevance, testing what people say they do, or would do — and all too often merely what they say they think. Other techniques hold much more promise as a means of addressing the research questions identified above, in particular field studies of actual human behaviour, laboratory experiments involving actual human behaviour in controlled environments, demonstrators, and open-source code to implement services or features.

Privacy concerns about social media services vary among user segments, and over time. Some categories of people, some of the time, are subject to serious safety risks as a result of self-exposure and exposure by others; many people, a great deal of the time, are subject to privacy harm; and some people simply dislike being forced to be open and exposed, and much prefer to lead a closed life. From time to time, negative public reactions and media coverage have affected many social media providers, including Facebook, Google and Instagram. There are signs that the occurrences are becoming more frequent and more intensive.

The analytical framework presented in this paper offers a means whereby designers can identify aspects of their services that need attention, either to prevent serious harm to their business or to increase the attractiveness of their services to their target markets. Researchers can also apply the framework in order to gain insights into the significance of the various forms of privacy concern.

[*] Principal, Xamax Consultancy Pty Ltd, Canberra; Visiting Professor, UNSW Law, University of NSW, Sydney; Visiting Professor, Research School of Computer Science, ANU, Canberra.

[1] T O’Reilly, ‘What Is Web 2.0? Design Patterns and Business Models for the Next Generation of Software’ on O’Reilly, (30 September 2005)

<http://www.oreillynet.com/lpt/a/6228> .

[2] D Best, Web 2.0: Next Big Thing or Next Big Internet Bubble? (January, 2006) Lecture Web Information Systems, Technische Universiteit Eindhoven,

<http://www.scribd.com/doc/4635236/Web-2-0> .

[3] R Clarke, ‘Web 2.0 as Syndication’ (August 2008) 3(2) Journal of Theoretical and Applied Electronic Commerce Research 30

<http://www.jtaer.com/portada.php?agno=2008&numero=2#>.

Preprint at <http://www.rogerclarke.com/EC/Web2C.html> .

[4] A M Kaplan and M Haenlein, ‘Users of the world, unite! The challenges and opportunities of social media’ (Jan-Feb 2010) 53(1) Business Horizons 59, 61. DOI: http://dx.doi.org/10.1016/j.bushor.2009.09.003.

[5] E Otte and R Rousseau, ‘Social network analysis: a powerful strategy, also for the information sciences’ (2002) 28(6) Journal of Information Science 441 <http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.95.3227&rep=rep1&type=pdf>. DOI: 10.1177/016555150202800601.

[6] M L Katz and C Shapiro, ‘Systems Competition and Network Effects’ (Spring 1994) 8(2) Journal of Economic Perspectives 93 <http://brousseau.info/pdf/cours/Katz-Shapiro%5B1994%5D.pdf> .

[7] A A Adams, ‘Facebook Code: SNS Platform Affordances and Privacy’ (2014) 23(1) Journal of Law, Information and Science.

[8] J Hendler and J Golbeck, ‘Metcalfe’s law, Web 2.0, and the Semantic Web’ (2008) 6(1) Web Semantics: Science, Services and Agents on the World Wide Web 14 <http://smtp.websemanticsjournal.org/index.php/ps/article/download/130/128> . Doi: http://dx.doi.org/10.1016/j.websem.2007.11.008.

[9] R Clarke, ‘Trust in the Context of e-Business’ (February 2002) 4(5) Internet Law Bulletin 56 <http://www.rogerclarke.com/EC/Trust.html>; R Clarke, ‘B2C Distrust Factors in the Prosumer Era’ (keynote paper presented at CollECTeR Iberoamerica, Madrid, 25-28 June 2008) 1-12 <http://www.rogerclarke.com/EC/Collecter08.html>; J Deighton and L Kornfeld, ‘Interactivity’s Unanticipated Consequences for Marketers and Marketing’ (2009) 23(1) Journal of Interactive Marketing 4.

[10] R Clarke, Consumer-Oriented Social Media: The Identification of Key Characteristics (January 2013) Xamax Consultancy,

<http://www.rogerclarke.com/II/COSM-1301.html> .

[11] Ibid.

[12] S M Petersen, ‘Loser Generated Content: From Participation to Exploitation’ (March 2008) 13(3) First Monday <http://firstmonday.org/ojs/index.php/fm/article/view/2141/1948>; R O’Connor, ‘Facebook is Not Your Friend’ (15 April 2012) Huffington Post <http://www.huffingtonpost.com/rory-oconnor/facebook-privacy_b_1426807.html>; P J Rey, ‘Alienation, Exploitation, and Social Media’ (April 2012) 56(4) American Behavioral Scientist 399.

[13] G Marks, Why Facebook Is In Decline (19 August 2013) Forbes, <http://www.forbes.com/sites/quickerbettertech/2013/08/19/why-facebook-is-in-decline/>; R Cormack, The Decline of Facebook (24 September 2013) Social Media Frontiers, <http://www.socialmediafrontiers.com/2013/09/social-media-news-decline-of-facebook.html>.

[14] D Peppers and M Rogers, The One to One Future: Building Relationships One Customer at a Time (Doubleday, 1993); R Clarke, ‘Privacy, Dataveillance, Organisational Strategy’ (keynote address, I S Audit and Control Association Conference (ISACA/EDPAC ‘96), Perth, 28 May 1996) <http://www.rogerclarke.com/DV/PStrat.html>; R Levine, C Locke, D Searls and D Weinberger, The Cluetrain Manifesto: The End of Business As Usual (Perseus Books, 1999); A Cavoukian and T Hamilton, The Privacy Payoff: How Successful Businesses Build Consumer Trust (McGraw-Hill Ryerson Trade, 2002); R Clarke, ‘Make Privacy a Strategic Factor - The Why and the How’ (October 2006) 19(11) Cutter IT Journal <http://www.rogerclarke.com/DV/APBD-0609.html>.

[15] J Guynn, ‘Privacy watchdog EPIC files complaint against Snapchat with FTC’, Los Angeles Times (17 May 2013) <http://www.latimes.com/business/technology/la-fi-tn-privacy-watchdog-epic-files-complaint-against-snapchat-with-ftc-20130517,0,3618395.story#axzz2krJ3636V> .

[16] Clarke, ‘B2C Distrust Factors in the Prosumer Era’, above n 9.

[17] A Toffler, Future Shock (Pan, 1970) 240-258; A Toffler, The Third Wave (Pan, 1980) 275-299, 355-356, 366, 397-399.

[18] D Tapscott and A D Williams, Wikinomics: How Mass Collaboration Changes Everything (Portfolio, 2006).

[19] I Brown and C T Marsden, Regulating Code (MIT Press, 2013).

[20] A Bruns, From Prosumer to Produser: Understanding User-Led Content Creation (paper presented at Transforming Audiences, London, 3-4 September, 2009).

[21] F D Schoeman (ed), Philosophical Dimensions of Privacy: An Anthology (Cambridge University Press, 1984).

[22] J Hirshleifer, ‘Privacy: Its Origin, Function and Future’ (1980) 9(4) Journal of Legal Studies 649.

[23] D Lindsay, ‘An Exploration of the Conceptual Basis of Privacy and the Implications for the Future of Australian Privacy Law’ [2005] MelbULawRw 4; (2005) 29(1) Melbourne University Law Review 131.

[24] H Nissenbaum, Privacy in Context: Technology, Policy, and the Integrity of Social Life (Stanford University Press, 2009).

[25] R A Posner, ‘The Economics of Privacy’ (May 1981) 71(2) American Economic Review 405; A Acquisti, ‘Privacy in Electronic Commerce and the Economics of Immediate Gratification’ (Proceedings of the ACM Electronic Commerce Conference (EC 04), New York) 21-29 <http://www.heinz.cmu.edu/~acquisti/papers/privacy-gratification.pdf>.

[26] W L Morison, Report on the Law of Privacy (Government Printer, 1973); Clarke, ‘Privacy, Dataveillance, Organisational Strategy’, above n 14; R Clarke, Introduction to Dataveillance and Information Privacy, and Definitions of Terms (August 1997) Xamax Consultancy <http://www.rogerclarke.com/DV/Intro.html>; R Clarke, What’s ‘Privacy?’ Submission to the Australian Law Reform Commission (July 2006) Xamax Consultancy <http://www.rogerclarke.com/DV/Privacy.html>.

[27] Clarke, ‘Privacy, Dataveillance, Organisational Strategy’, above n 14; Clarke, Introduction to Dataveillance and Information Privacy, and Definitions of Terms, above n 26.

[28] R Clarke, ‘Person-Location and Person-Tracking: Technologies, Risks and Policy Implications’ (paper presented at 21st International Conference on Privacy and Personal Data Protection, Hong Kong, September 1999). Revised version published in (2001) 14(1) Information, Technology & People 206 <http://www.rogerclarke.com/DV/PLT.html>; K S Jones, ‘Privacy: What’s Different Now?’ (December 2003) 28(4) Interdisciplinary Science Reviews 287; d boyd, ‘Facebook’s Privacy Trainwreck: Exposure, Invasion, and Social Convergence’ (2009) 14(1) International Journal of Research into New Media Technologies 13; R Clarke and M R Wigan, ‘You Are Where You’ve Been: The Privacy Implications of Location and Tracking Technologies’ (December 2011) 5(3-4) Journal of Location Based Services 138 <http://www.rogerclarke.com/DV/YAWYB-CWP.html>; K Michael and R Clarke, ‘Location and Tracking of Mobile Devices: Überveillance Stalks the Streets’ (June 2013) 29(3) Computer Law & Security Review 216 <http://www.rogerclarke.com/DV/LTMD.html>.

[29] D Ellsberg, ‘Edward Snowden: saving us from the United Stasi of America’, The Guardian (online), 10 June 2013

<http://www.theguardian.com/commentisfree/2013/jun/10/edward-snowden-united-stasi-america> .

[30] See, eg, D J Solove, ‘A Taxonomy of Privacy’ (January 2006) 154(3) University of Pennsylvania Law Review 477; D Solove, Understanding Privacy (Harvard University Press, 2008).

[31] M R Wigan and R Clarke, ‘Big Data’s Big Unintended Consequences’ (June 2013) 46(3) IEEE Computer <http://www.rogerclarke.com/DV/BigData-1303.html> .

[32] A Krotoski, ‘Online identity: is authenticity or anonymity more important?’, The Guardian (online), 19 April 2012

<http://www.guardian.co.uk/technology/2012/apr/19/online-identity-authenticity-anonymity/print> .

[33] R L Finn, D Wright and M Friedewald, ‘Seven Types of Privacy’ in S Gutwirth, R Leenes, P de Hert and Y Poullet (eds), European Data Protection: Coming of Age (Springer Science+Business Media, 2013)

<http://works.bepress.com/michael_friedewald/60> .

[34] J E Cohen, ‘A Right to Read Anonymously: A Closer Look at “Copyright Management” in Cyberspace’ (1996) 28 Connecticut Law Review 981

<http://papers.ssrn.com/sol3/papers.cfm?abstract_id=17990> .

[35] G W Greenleaf, ‘IP, Phone Home: Privacy as Part of Copyright’s Digital Commons in Hong Kong and Australian Law’ in L Lessig (ed), Hochelaga Lectures 2002: The Innovation Commons (Sweet & Maxwell Asia, 2003)

<http://papers.ssrn.com/sol3/papers.cfm?abstract_id=2356079> .

[36] European Commission, Proposal for a Regulation of the European Parliament and of the Council on the protection of individuals with regard to the processing of personal data and on the free movement of such data (General Data Protection Regulation), 25 January 2012, COM(2012) 11 final 2012/0011 (COD) <http://ec.europa.eu/justice/data-protection/document/review2012/com_2012_11_en.pdf>.

[37] R Clarke, Very Black ‘Little Black Books’ (February 2004) Xamax Consultancy <http://www.rogerclarke.com/DV/ContactPITs.html>; W Harris, Why Web 2.0 will end your privacy (3 June 2006) bit-tech.net <http://www.bit-tech.net/columns/2006/06/03/web_2_privacy/>; S B Barnes, ‘A privacy paradox: Social networking in the United States’ (September 2006) 11(9) First Monday <http://firstmonday.org/htbin/cgiwrap/bin/ojs/index.php/fm/article/viewArticle/1394/1312%23>; Clarke, above n 3.

[38] Clarke, Very Black ‘Little Black Books’, above n 37.

[39] See, eg, boyd, above n 28; M Helft, ‘Critics Say Google Invades Privacy With New Service’, The New York Times (online), 12 February 2010 <http://www.nytimes.com/2010/02/13/technology/internet/13google.html?_r=1>; R Waugh, ‘“Unfair and unwise”: Google brings in new privacy policy for two billion users - despite EU concerns it may be illegal’, Daily Mail (online), 2 March 2012 <http://www.dailymail.co.uk/sciencetech/article-2108564/Google-privacy-policy-changes-Global-outcry-policy-ignored.html>; E Bell, ‘The real threat to the open web lies with the opaque elite who run it’, The Guardian (online), 16 April 2012, <http://www.guardian.co.uk/commentisfree/2012/apr/16/threat-open-web-opaque-elite>.

[40] Google: Gmail users ‘have no legitimate expectation of privacy’ (13 August 2013) rt.com <http://rt.com/usa/google-gmail-motion-privacy-453/>.

[41] K Bankston, Facebook’s New Privacy Changes: The Good, The Bad, and The Ugly (9 December 2009) Electronic Frontier Foundation

<https://www.eff.org/deeplinks/2009/12/facebooks-new-privacy-changes-good-bad-and-ugly>.

[42] K Opsahl, Facebook’s Eroding Privacy Policy: A Timeline (28 April 2010) Electronic Frontier Foundation <https://www.eff.org/deeplinks/2010/04/facebook-timeline/>.

[43] G Gates, ‘Facebook Privacy: A Bewildering Tangle of Options’, The New York Times (online), 12 May 2010 <http://www.nytimes.com/interactive/2010/05/12/business/facebook-privacy.html>.

[44] M McKeon, The Evolution of Privacy on Facebook (May 2010) mattmckeon.com <http://mattmckeon.com/facebook-privacy/> .

[45] d boyd and E Hargittai, ‘Facebook privacy settings: Who cares?’ (July 2010) 15(8) First Monday

<http://firstmonday.org/htbin/cgiwrap/bin/ojs/index.php/fm/article/view/3086/2589> .

[46] BBC, ‘Facebook U-turns on phone and address data sharing’, BBC News (online), 18 January 2011 <http://www.bbc.com/news/technology-12214628> .

[47] N Lankton and D H McKnight, ‘What Does it Mean to Trust Facebook? Examining Technology and Interpersonal Trust Beliefs’ (2012) 42(2) Data Base for Advances in Information Systems 32.

[48] Opsahl, above n 42.

[49] M Shiels, ‘Facebook’s bid to rule the web as it goes social’, BBC News (online), 22 April 2010 <http://news.bbc.co.uk/2/hi/8590306.stm> .

[50] N Carlson, Well, These New Zuckerberg IMs Won’t Help Facebook’s Privacy Problems (13 May 2010) Business Insider <http://www.businessinsider.com/well-these-new-zuckerberg-ims-wont-help-facebooks-privacy-problems-2010-5> .

[51] Adams, above n 7.

[52] P Sprenger, ‘Sun on privacy: Get over it’ Wired (online), 26 Jan 1999 <http://www.wired.com/politics/law/news/1999/01/17538> .

[53] R Esguerra, Google CEO Eric Schmidt Dismisses the Importance of Privacy (10 December 2009) Electronic Frontier Foundation,

<https://www.eff.org/deeplinks/2009/12/google-ceo-eric-schmidt-dismisses-privacy>.

[54] O’Connor, above n 12.

[55] Clarke, above n 3.

[56] B Schneier, Liars and Outliers: Enabling the Trust that Society Needs to Thrive (Wiley, 2012) particularly ch 4.

[57] B Barber, The Logic and Limit of Trust (Rutgers University Press, 1983).

[58] T Yamagishi, Trust: The Evolutionary Game of Mind and Society (Springer, 2011) 28.

[59] Clarke, ‘Trust in the Context of e-Business’ above n 9; R Clarke, ‘e-Consent: A Critical Element of Trust in e-Business’ (Proceedings of the 15th Bled Electronic Commerce Conference, Bled, Slovenia, 17-19 June, 2002)

<http://www.rogerclarke.com/EC/eConsent.html> .

[60] R M Kramer and T R Tyler (eds), Trust in Organizations: Frontiers of Theory and Research (Sage Research, 1996) 4, citing O Williamson, ‘Calculativeness, trust, and economic organization’ (1993) 36 Journal of Law and Economics 453.

[61] For example, M K O Lee and E Turban, ‘A Trust Model for Consumer Internet Shopping’ (Fall 2001) 6(1) International Journal of Electronic Commerce 75.

[62] Clarke, ‘Trust in the Context of e-Business’ above n 9.

[63] M I Melnik and J Alm, ‘Does a Seller’s eCommerce Reputation Matter? Evidence from eBay Auctions’ (September 2002) 50(3) Journal of Industrial Economics 337, 337 <http://www.gsu.edu/~wwwsps/publications/2002/ebay.pdf> .

[64] Ibid 349.

[65] R Clarke, ‘Meta-Brands’ (May 2001) 7(11) Privacy Law & Policy Reporter <http://www.rogerclarke.com/DV/MetaBrands.html> .

[66] R C Mayer, J H Davis and F D Schoorman, ‘An Integrative Model of Organizational Trust’ (1995) 20(3) Academy of Management Review 709.

[67] Lee and Turban, above n 61.

[68] J Salo and H Karjaluoto, ‘A conceptual model of trust in the online environment’ (September 2007) 31(5) Online Information Review 604.

[69] R Clarke, ‘The Willingness of Net-Consumers to Pay: A Lack-of-Progress Report’ (Proceedings of the 12th International Bled Electronic Commerce Conference, Bled, Slovenia, 7-9 June, 1999) <http://www.rogerclarke.com/EC/WillPay.html> .

[70] S Ba and P Pavlou, ‘Evidence of the effect of trust building technology in electronic markets: Price premiums and buyer behavior’ (September 2002) 26(3) MIS Quarterly 243 <http://oz.stern.nyu.edu/rr2001/emkts/ba.pdf> .

[71] D H McKnight and N L Chervany, ‘While trust is cool and collected, distrust is fiery and frenzied: A model of distrust concepts’ (Proceedings of the 7th Americas Conference on Information Systems, Boston, 3-5 August, 2001) 883; D H McKnight and N L Chervany, ‘Trust and Distrust Definitions: One Bite at a Time’ in R Falcone, M Singh and Y-H Tan (eds): Trust in Cyber-societies, LNAI 2246, (Springer-Verlag, 2001) 27; D H McKnight and N L Chervany ‘Distrust and Trust in B2C E-Commerce: Do They Differ?’ (Proceedings of ICEC’06, Fredericton, Canada 14–16 August, 2006).

[72] J Riegelsberger and M A Sasse, Trustbuilders and Trustbusters: The Role of Trust Cues in Interfaces to e-Commerce Applications (Presented at the 1st IFIP Conference on e-commerce, e-business, e-government (i3e) Zurich, 3-5 October 2001) 17-30, <http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.17.8688&rep=rep1&type=pdf>.

[73] Clarke, ‘B2C Distrust Factors in the Prosumer Era’, above n 9; R Clarke, ‘The Cloudy Future of Consumer Computing’ (Proceedings of the 24th Bled eConference, June 2011) <http://www.rogerclarke.com/EC/CCC.html> .

[74] For a comprehensive analysis of the categories of ‘persons at risk’, see GFW, Who is harmed by a ‘Real Names’ policy? (2011) Geek Feminism Wiki

<http://geekfeminism.wikia.com/wiki/Who_is_harmed_by_a_%22Real_Names%22_policy%3F> .

[75] Clarke, above n 10.

[76] R Clarke, Privacy Statement Template (2005) Xamax Consultancy

<http://www.rogerclarke.com/DV/PST.html> .

[77] D Wright and P de Hert (eds), Privacy Impact Assessment (Springer, 2012).

[78] Clarke, above n 10.

[79] Kaplan and Haenlein, above n 4, 67.

[80] R D Galliers, ‘Choosing Information Systems Research Approaches’ in R D Galliers (ed), Information Systems Research: Issues, Methods and Practical Guidelines (Blackwell, 1992) 144; R Clarke, ‘If e-Business is Different Then So is Research in e-Business’ (Proceedings of the IFIP TC8 Working Conference on E-Commerce/E-Business, Salzburg, 22-23 June 2001) <http://www.rogerclarke.com/EC/EBR0106.html>; W Chen and R Hirschheim, ‘A paradigmatic and methodological examination of information systems research from 1991 to 2001’ (2004) 14(3) Information Systems Journal 197.


URL: http://www.austlii.edu.au/au/journals/JlLawInfoSci/2014/8.html