University of New South Wales Law Journal


EXPERT REPORTS AND THE FORENSIC SCIENCES

GARY EDMOND,[*] KRISTY MARTIRE[**] AND MEHERA SAN ROQUE[***]

All Australian jurisdictions regulate the admission of expert opinion evidence. The rules focus on ‘specialised knowledge’, the existence of a ‘field’, and ‘training, study or experience’.[1] They purport to regulate not only oral testimony but also the expert reports prepared in advance of potential proceedings, which often shape the way charge decisions are made, pleas are negotiated, and cases are settled, prosecuted, defended and occasionally appealed. In recent decades, in conjunction with attempts to enhance the efficiency of legal proceedings, courts have embarked on efforts to regulate the content and disclosure of expert reports through court rules and practice notes. Most of these emerged from judicial concerns about partisanship and the resources consumed by contested expert opinion evidence in civil proceedings. Only later were they extended to criminal proceedings. This article reviews the rules regulating expert reports, particularly around determining guilt in criminal proceedings. Through a detailed review of responses to an expert certificate in a recent trial in NSW, along with a revised report template developed in its aftermath, this article explains the importance of complying with codes of conduct and practice notes and engaging with scientific research and advice.

This article begins with a brief review of rules and standards regulating expert witnesses and their reports or certificates,[2] before moving to critically review a recent challenge to the admissibility of fingerprint evidence in the Children’s Court of New South Wales in JP v Director of Public Prosecutions (NSW).[3] That case included an unusually robust attempt to exclude the evidence of a police latent fingerprint examiner called by the Crown.[4] In reviewing the certificate and oral evidence adduced at trial, as well as the revised reporting template developed in the shadow of the appeal, we draw attention to relevant literatures, perspectives and criticisms that point to the unsatisfactory nature of many of the certificates and reports routinely produced for criminal investigations and routinely relied upon in criminal prosecutions.[5] While it is important to acknowledge that the report template developed in response to the challenge raised by JP v DPP represents an improvement on past practice, this article identifies and explains important limitations. Many of the issues raised in this article are applicable – either directly or indirectly – to other types of forensic science and medicine evidence.[6]

In terms of rules, the High Court has explained on a number of occasions that opinions based on specialised knowledge (so-called ‘expert opinion evidence’) should be presented in a form that enables the decision-maker to determine whether the evidence is admissible:[7]

the provisions of s 79 will often have the practical effect of emphasising the need for attention to requirements of form. By directing attention to whether an opinion is wholly or substantially based on specialised knowledge based on training, study or experience, the section requires that the opinion is presented in a form which makes it possible to answer that question.[8]

This applies not only to testimony, but perhaps more importantly to reports and certificates prepared in the pre-trial stage, given the potential effect such reports may have on defence and prosecutorial decision-making. Compliance with admissibility rules, such as section 79 of the UEL, in these terms is fundamental when adducing the opinions of those presented as experts, because decision-makers – whether judge, jury or counsel – must be placed in a position to rationally evaluate the evidence:[9]

it is a primary duty imposed on experts in giving opinion evidence to furnish the trier of fact with the criteria to enable the evaluation of the expert conclusion: Makita (Australia) Pty Ltd v Sprowles ... The ‘bare ipse dixit’ of a scientist upon an issue in controversy should carry little weight. See Davie v Magistrates of Edinburgh ...[10]

This idea, along with some sense of judicial anxiety about witnesses making unsupported assertions, is reflected in a number of prominent decisions. In Makita, Heydon JA explained that ‘[t]he jury cannot weigh and determine the probabilities for themselves if the expert does not fully expose the reasoning relied on’.[11] It follows that expert reports must be written in a manner that, at the very least, enables a technically proficient individual to make an assessment of the conclusion and the reasoning behind it. Ideally, reports should be written in a manner that enables lay users, namely lawyers, judges and potentially jurors, to understand not only the conclusion or gist but also what was done, the reasoning process and, critically, provide insight into uncertainties and limitations.

Significantly, judges in the Federal Court of Australia have also read these requirements into the terms of section 79. Finding expert reports inadmissible in Ocean Marine Mutual Insurance Association (Europe) OV v Jetopay Pty Ltd, Black CJ, Cooper and Emmett JJ explained:

it is not permissible to conclude, simply because a person expresses an opinion on a particular subject, referring to particular technology, that that person has any specialised knowledge in relation to that subject. There must be specific evidence as to specialised knowledge of the person in relation to that subject and as to the training, study or experience upon which that specialised knowledge is based. ... The further requirement that an opinion be based on specialised knowledge would normally be satisfied by the person who expresses the opinion demonstrating the reasoning process by which the opinion was reached.[12]

Subsequently in Pan Pharmaceuticals Ltd (in liq) v Selim, detailed reports prepared by individuals with extensive experience in the pharmaceutical industry were deemed inadmissible or to lack sufficient probative value because the ‘specialised knowledge’ was not identified and the relationship between the opinion, the specialised knowledge and any ‘training, study or experience’ was not explained.[13] Interestingly, deficiencies in the reports were not necessarily repaired, or said to be reparable, by additional testimony from the author.[14]

Most jurisdictions now have codes of conduct or practice notes regulating expert reports. Not rules of admissibility per se, codes and practice notes are important guides for expert witnesses in criminal and civil proceedings. They explain the duties owed by expert witnesses and what should be included in reports, and are intended to encourage experts to impartially prepare their evidence in order to facilitate legal decision-making. Non-compliance with codes of conduct and practice notes, especially minor omissions or oversights, is unlikely to lead to the exclusion of evidence.[15] Nevertheless, compliance is generally vital for admissibility gatekeeping and gauging the probative value and weight of expert opinions, and yet the substantive requirements of the codes of conduct and practice notes are often overlooked in practice. Compliance with the terms and spirit of these procedural rules is essential for those engaged in plea and charge decisions, admissibility challenges via section 79 of the UEL, attempts to gauge probative value (even ‘at its highest’) according to sections 135 or 137 of the UEL, decisions about the need to obtain expert advice or to engage a rebuttal expert, decisions about how to cross-examine a witness, or frame directions and warnings under section 165 of the UEL.[16] Below we review the NSW Expert Witness Code of Conduct (‘NSW Code’),[17] the Victorian practice note entitled Expert Evidence in Criminal Trials (‘Victorian Practice Note’)[18] and the Australian Standard entitled Forensic Analysis: Part 4: Reporting (‘Forensic Reporting Standard’).[19]

Applicable to both civil and criminal proceedings, the NSW Code, revealingly, forms part of the Uniform Civil Procedure Rules 2005 (NSW).[20] Slightly revised in 2016, as part of standardisation led by the chief justices of Australia, it was based upon a substantially similar practice note used in the Federal Court of Australia.[21] The Federal Court guidelines, developed decades ago in the aftermath of The Ikarian Reefer litigation and an influential review of civil justice in England conducted by Lord Woolf, have also recently been revised.[22]

According to the NSW Code, expert witnesses are engaged for the purpose of ‘providing an expert’s report for use as evidence’ or ‘giv[ing] evidence in proceedings or proposed proceedings’.[23] The expert witnesses’ ‘paramount duty’, ‘overriding any duty to the proceedings’ or retainer is ‘to assist the court impartially on matters relevant to the area of expertise of the witness’.[24] To make this crystal clear, the NSW Code states that an expert witness is ‘not an advocate for a party’.[25] Courts purport to place a premium on the impartial assistance of those with relevant expertise.[26] These obligations should inform interpretation of the NSW Code and the forensic practitioner’s performance;[27] preparing and presenting evidence in certificates, reports and oral testimony.[28]

The NSW Code is not exhaustive, but specifies things that ‘must’ be included in an expert report, many of which support the presentation of the evidence in a form that enables the judge to determine admissibility. Titled ‘Content of report’, clause 3 of the NSW Code states:

Every report prepared by an expert witness for use in court must clearly state the opinion or opinions of the expert and must state, specify or provide:

(a) the name and address of the expert, and

(b) an acknowledgement that the expert has read this code and agrees to be bound by it, and

(c) the qualifications of the expert to prepare the report, and

(d) the assumptions and material facts on which each opinion expressed in the report is based (a letter of instructions may be annexed), and

(e) the reasons for and any literature or other materials utilised in support of each such opinion, and

(f) (if applicable) that a particular question, or matter falls outside the expert’s field of expertise, and

(g) any examinations, tests or other investigations on which the expert has relied, identifying the person who carried them out and that person’s qualifications, and

(h) the extent to which any opinion which the expert has expressed involves the acceptance of another person’s opinion, the identification of that other person and the opinion expressed by that other person, and

(i) a declaration that the expert has made all the inquiries which the expert believes are desirable and appropriate (save for any matters identified explicitly in the report), and that no matters of significance which the expert regards as relevant have, to the knowledge of the expert, been withheld from the court, and

(j) any qualification of an opinion expressed in the report without which the report is or may be incomplete or inaccurate, and

(k) whether any opinion expressed in the report is not a concluded opinion because of insufficient research or insufficient data or for any other reason, and

(l) where the report is lengthy or complex, a brief summary of the report at the beginning of the report.[29]

The NSW Code requires the identification of ‘assumptions and material facts’ grounding opinions as well as the reasons for each opinion.[30] Persisting with common law terminology (eg, ‘field’), it also obliges the expert to specify: the scope of the opinion, if that is relevant; literature and other materials ‘utilised’; and ‘any examinations, tests or other investigations ... identifying the person who carried them out’.[31] Reports should include a declaration that all ‘desirable and appropriate’ inquiries have been made and that ‘no matters of significance ... have ... been withheld from the court’. The report should not omit information or qualifications ‘without which the report is or may be incomplete or inaccurate’. Similarly, if the opinion is constrained by ‘insufficient research or insufficient data or for any other reason’, this must be disclosed. However, in many respects the requirements remain expressed in subjective terms, depending on the individual expert’s stated ‘beliefs’, rather than embodying the expectation of more comprehensive disclosure.[32] The NSW Code also contemplates the possibility of a forensic practitioner changing his or her opinion and the production of a supplementary report.

Finally, the NSW Code refers to expert conferences. Where experts participate in a conference they ‘must exercise ... independent judgment ... and must not act on any instruction or request to withhold or avoid agreement’.[33]

First published in 2014, and reissued in 2017, the Victorian Practice Note represents a more recent and important contribution in this domain.[34] As the title implies, it was specifically drafted for expert evidence in criminal proceedings in Victoria.[35] It serves as a useful comparator. Like the NSW Code and the earlier Federal Court practice note, the Victorian Practice Note imposes ‘an overriding duty to assist the Court impartially, by giving objective, unbiased opinion on matters within the expert’s specialised knowledge’.[36] Notwithstanding recent revisions to the NSW Code in an attempt to enhance harmonisation of procedural rules across jurisdictions, the Victorian Practice Note diverges in several significant ways, all of which represent an improvement on the NSW Code.

First, the Victorian Practice Note explains its purpose, namely, ‘to enhance the quality and reliability of expert evidence relied on by the prosecution and the accused in criminal trials’.[37] There is an explicit focus on quality and reliability in order to enhance the value of evidence and the efficiency of criminal proceedings.

Secondly, the Victorian Practice Note is more insistent and directed than the NSW Code. The Note employs the language of the Evidence Act 2008 (Vic) while reinforcing the centrality of impartiality. Paragraph 4.1, following federal and NSW courts, refers to expectations around impartiality, ‘objective’ and ‘unbiased’ performances, but also incorporates ‘specialised knowledge’ from section 79 of the UEL. This insistence is also found in the more detailed expectations in relation to the kinds of information that will inform understandings of quality, validity, reliability and probative value in the provisions specifying the content of reports. In addition to the requirements set out in the NSW Code, paragraph 6.1 of the Victorian Practice Note requires expert reports to disclose:

(i) any qualification of an opinion expressed in the report, without which the report would or might be incomplete or misleading;

(j) any limitation or uncertainty affecting the reliability of

(i) the methods or techniques used; or

(ii) the data relied on –

to arrive at the opinion(s) in the report; and

(k) any limitation or uncertainty affecting the reliability of the opinion(s) in the report as a result of –

(i) insufficient research; or

(ii) insufficient data.

The additional requirements in (j) and (k) are more directed and informative than the diffuse expectations expressed in the NSW Code. The Victorian Practice Note requires the impartial and unbiased witness to disclose any qualification that if omitted might lead to an ‘incomplete or misleading’ report. Interest in ‘reliability’, emphasised in the purposes section, reappears among the specific obligations. Whereas the NSW Code refers to the need to disclose if an opinion is not ‘concluded’, the Victorian version emphasises the obligation to disclose ‘any limitation or uncertainty’ that affects the reliability of the opinion, and thus might influence the interpretation of the report. The Victorian Practice Note makes it clear that there is an obligation to address limitations or uncertainty at the level of method and technique as well as limitations in data and/or research.[38]

Thirdly, and significantly, the Victorian Practice Note requires the expert to disclose the existence of controversy. Paragraph 6.2 states that if the expert is aware of any ‘significant and recognised disagreement or controversy’ that is ‘directly relevant to the expert’s ability, technique or opinion, the expert must disclose [its] existence’. Where there is non-trivial controversy or criticism there is an onus on the expert to disclose, even where they find the concerns unpersuasive or believe they do not materially affect the opinion. This is an objective standard. Where there is some non-trivial controversy or dispute that touches upon the evidence or the assumptions, underlying methods, interpretation or expression of results, the Note requires the report to draw it to the attention of the non-expert audience.[39]

Finally, the Victorian Practice Note refers to and applies to different kinds of reports. The Note applies to all reports that a party proposes to rely on in court, including primary reports and ‘responding’ reports, and also enables the accused to request that the prosecution obtain additional reports addressing a specific matter in dispute.[40]

As we shall see, the Victorian Practice Note is better suited to the provision and evaluation of expert evidence in criminal proceedings than NSW and federal equivalents. Nevertheless, it does not direct explicit attention to issues that are fundamental to a great deal of forensic science and medicine evidence, namely validity, error rates, human factors (eg, cognitive bias) and demonstrable proficiency.[41]

In this context it is also useful to draw attention to another important – though largely neglected – resource: the Forensic Reporting Standard, published in 2013.[42] The Standard lists most of the features required by the NSW Code and Victorian Practice Note, but also places emphasis on clarity, technical review, limitations and some dangers. Interestingly, the Standard is not referenced in most expert reports prepared by forensic practitioners in Australia. This omission appears to have much to do with an apparent reluctance, or inability, to comply with its strictures. This might be considered curious given that those responsible for the industry-oriented Australian Standards were employed by, or representing, Australian police services and other forensic science bodies.[43] NSW Police were represented on the relevant Australian Standards committee and the Forensic Services Group were consulted during drafting.

The Forensic Reporting Standard rewards reading, though for our purposes a few requirements warrant specific attention. The Standard places emphasis on the need for clarity and transparency in both what was done and what was concluded, but also ‘the limitations associated with the process’. These are provided for in the ‘General Provisions’:

In all instances, the author of a forensic report shall be concerned solely with reporting the results and opinions based on forensic examinations. Reports should be clear to the reader, so that it is readily understood what was done, what was concluded and the limitations associated with the process.[44]

Limitations are explicitly revisited in clause 9.4 of the Standard:

Known limitations of the methods or procedures and results and opinions used should be stated clearly and unambiguously in the report or an appendix attached to the report. This may include references to authoritative and critical literature, whether the method has been validated, and known error rates where available and relevant.[45]

The Standard refers to two types of review for reports, specifically ‘administrative review’ and ‘technical review’. Administrative review is a form of editorial review that may not involve any checking of technical data, results or opinions.[46] Technical review is of a different order:

The technical review shall include all observations, results and opinions in the case notes, to ensure their validity. Where a report has been subjected to technical review, evidence of the review shall be maintained.

All technical reviews shall be completed by a peer or authorized person. ... Where such technical review is not possible, the report shall contain a disclaimer to the effect that such review was not carried out.

Results or opinions on which agreement has been reached between the examiner and the reviewer may be included as results or opinions in the contents of a report.

If agreement between the examiner and the reviewer cannot be reached, an additional independent review may be conducted. Facilities shall have documented policies on the reporting of findings where a dispute exists between different examiners as to the evaluation of data or observations or the interpretation of results.

Disagreement between a reviewer and examiner shall be recorded.

The final decision as to the reported findings shall rest with the examiner when both of the following conditions are met:

(a) All relevant documented experimental protocols have been followed.

(b) Release of the disputed finding has been approved by the facility director.[47]

Self-evidently, the Forensic Reporting Standard descends into more detail than its legal counterparts. Technical review includes an assessment of ‘all observations, results and opinions in the case notes, to ensure their validity’. Not only does the Standard, written specifically for the forensic sciences, refer to formal processes of review, but it countenances the possibility of disagreement between forensic practitioners and those technically reviewing reports. Curiously, the Standard seems designed to leave decisions about whether to report disagreement to forensic examiners.[48]

Clause 9.1 lists things that should be included in a forensic science report. Most of these resonate with the requirements of the NSW Code and Victorian Practice Note. Clauses 9.2 and 9.3 refer, respectively, to ‘Collection and continuity of forensic material’ and ‘Analysis and comparison of material’. Clause 9.5 addresses the ‘Reporting of opinions’:

Where professional judgment is involved in evidence collection, examination and/or analysis, opinions shall be differentiated from other sections of the report that may deal with factual observations, for example, scene description or continuity of possession.

Opinions derived from the interpretation of results should form part of the report’s conclusion. The conclusion should clearly outline the basis on which each opinion is formed and the process by which it is derived.

The author of an opinion shall take into account all relevant observations and results from the examinations and/or analyses. Care should be taken not to overstate or understate the value of any observations or testing carried out.

Opinions should not be presented in such a way as to overemphasize or underemphasize their certainty. The possibility of alternative explanations should be acknowledged. ...

If a client expressly requests an examiner to evaluate the results of their examinations in a particular context, then the context and the circumstances of the request shall be clearly stated in the report.

The report should clearly state which tests form the basis for an opinion.

Any reasonable, alternative explanations or opinions should be included together with reasons for their rejection or lower ranking.

Here we can discern the need to: distinguish opinions from other parts of the report; avoid overstating or understating the value of opinions; explain the basis for each opinion; carefully consider the information accessed; and, document what the forensic practitioner was given and told. There is, in addition, a need to consider reasonable, alternative explanations as well as reasons for their rejection or ranking.

The Standard descends into even more specificity for opinions linking a trace (eg, DNA, a fingerprint, a bullet casing or fibre) to a particular person or source: ‘common source’ attributions. Clause 9.5 continues:

Caution should be used in making common source opinions. When expressing opinions regarding a common source, examiners should –

(a) be aware of the logical objections to absolute individualization;

(b) be familiar with the relevant literature concerning the use of statistics in their field of expertise;

(c) avoid statements that cannot be supported by appropriate scientific testing; and

(d) recognize that opinion evidence is subjective in the sense that it entails professional judgement.

A result that, when interpreted, tends to support or tends to refute a hypothesis, should not be reported in such a way as to appear neutral.

These stipulations reveal concerns about opinions presented by those in the pattern recognition or comparison ‘sciences’ but the resulting expectations are to some extent incoherent. For example, the Forensic Reporting Standard appears to: recognise the logical impossibility of individualisation (in (a), in part reflected in the probabilistic approach applied to DNA evidence); require that statements, including those that positively identify (or individualise), must be ‘supported by appropriate scientific testing’ (in (c), notwithstanding the ‘logical objections’); and require those making illogical and unsubstantiated individualisations to be familiar with relevant statistical literatures that question the legitimacy of positive identification.[49] Rather than provide clear guidance, the Standard suggests the need for caution where forensic practitioners express opinions linked to a ‘common source’ as in the case of latent fingerprint evidence. Overall, we should expect disclosure and justification where forensic practitioners are engaged in ‘common source’ attributions.

In summary, the NSW Code, Victorian Practice Note and Forensic Reporting Standard impose obligations on forensic practitioners preparing reports for criminal proceedings that go beyond broad-brush commitments to nonpartisanship. They demand more than a statement that the practitioner agrees to be bound by the relevant rules. They offer a scheme intended to enhance the way lawyers, judges and other decision-makers understand and evaluate expert opinion evidence.

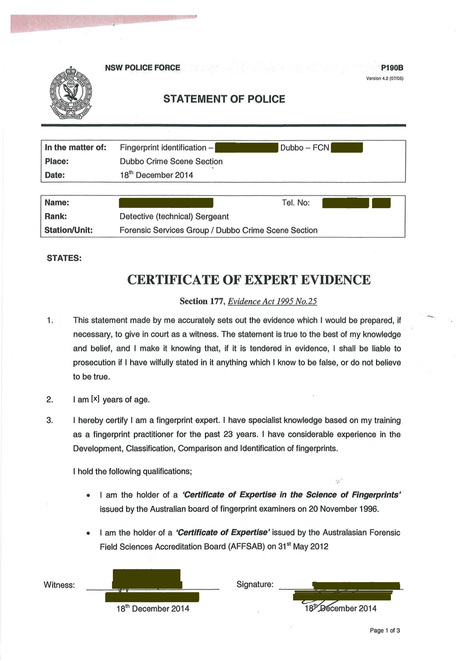

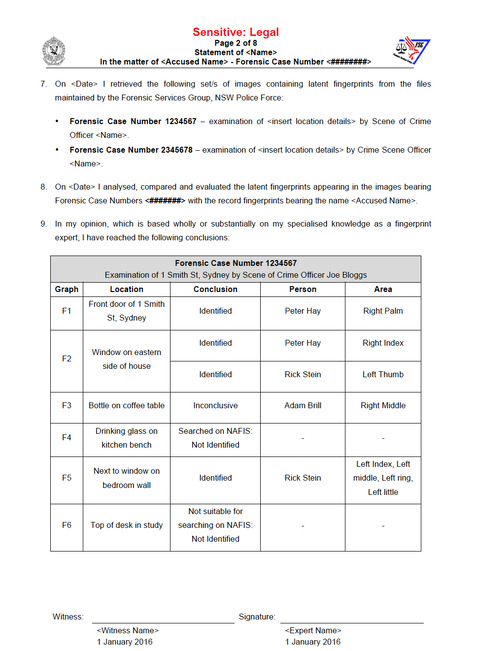

We now turn to consider an expert certificate relied upon in a recent prosecution based exclusively on latent fingerprint evidence.[50] More than a century after the High Court confirmed the admissibility of latent fingerprint evidence in Parker v The King there was a challenge to fingerprint evidence in JP v DPP.[51] JP, a minor, was tried and convicted of an aggravated break and enter in the Children’s Court in Dubbo. The case against JP was circumstantial, based entirely on him being ‘identified’ by a single latent fingerprint recovered from the scene, attributed to JP’s left thumb.[52] This was an individualisation or ‘common source’ attribution. The positive ‘identification’ was made by a NSW Police latent fingerprint examiner based in Dubbo.[53] On the basis of his assessment of the prints, the examiner produced an expert certificate, reproduced at the end of this article as Appendix I. The defence challenged the admissibility of the fingerprint evidence, including the certificate, at trial. Notwithstanding an unusually well-prepared challenge, the certificate and the examiner’s opinion were deemed admissible and relied upon to satisfy the magistrate beyond reasonable doubt that JP was responsible for the break and enter.[54] The sufficiency and admissibility of the fingerprint evidence, along with JP’s conviction, were subsequently raised in an unsuccessful appeal.[55]

JP v DPP was by no means the only challenge to the admissibility of fingerprint evidence in the decades following Parker v The King, although it is one of surprisingly few challenges, and the first to have drawn upon recent reviews that question some of the methods, practices and claims associated with fingerprint comparison.[56] The materials generated in JP v DPP, along with the production of a revised report template in its aftermath, afford a particularly good opportunity to critically examine the adequacy of reports and certificates produced routinely by state-employed forensic practitioners.

The expert certificate prepared for the investigation and used in the prosecution of JP is short and opaque. While it might be argued that it complies with the apparently minimal requirements of section 177 of the UEL, its form is inconsistent with the expectations expressed in the UCPR and the NSW Code. It is hard to imagine how a magistrate or judge could have determined whether the opinion was substantially based on ‘specialised knowledge’, and whether the knowledge was based on ‘training, study or experience’ for the purposes of section 79(1) of the UEL, let alone the value of the examiner’s conclusion. Rather than provide insight into the process and the reasoning, the certificate presents a small target. In the examiner’s opinion two different fingerprints – a latent fingerprint recovered from the crime scene and one in a police database – ‘matched’ and were therefore produced by the same person, namely JP. Apart from the bare references to retrieval and comparison, the certificate provides very little insight into what was done, what procedures were used and what standards applied. There are no references to limitations or uncertainties, no recognition of even the possibility of error, and no discussion of controversies. It is important to emphasise, given the legal response to the defence challenge, that the expert certificate prepared for the case is not unrepresentative.

Considering the admissibility of the expert opinion and the suitability of the certificate on appeal in the Supreme Court, Beech-Jones J found it to be inadequate:

nowhere in the certificate was there any statement of what [the] examination revealed. Instead there was simply a statement of the ultimate opinion formed ...[57]

I consider that the certificate provided by the prosecution’s fingerprint expert did not provide any reasoning sufficient to support the admissibility of the expert’s opinion, but the oral evidence of the expert rectified that discrepancy.[58]

Surprisingly, the inadequacies were said to be repaired by the answers supplied through the cross-examination undertaken by defence counsel.[59] We return to this issue in Part VI.

The outcome in JP v DPP throws the kinds of predicament facing defence counsel into sharp relief. On one hand, if admissibility is not challenged the evidence would be admitted and very likely relied upon, thereby foreclosing avenues of appeal. On the other hand, challenging the evidence, according to the judicial officers in JP v DPP, provided a corrective that rendered the certificate and oral evidence admissible. The limitations and frailties with the fingerprint identification procedure, the conditions under which it was undertaken, the nature of the conclusion, the examiner’s proficiency, and most significantly controversy within the domain, were not in the end disclosed by the Crown or addressed substantively by either of the judicial officers involved. At the trial level, the Court was not equipped to address fundamental issues raised by the defence. On appeal, relevant scientific literature was not before the Court. Nevertheless, both courts expressed a preference for the status quo: finding the positive identification evidence admissible and capable of supporting guilt beyond reasonable doubt. Both judicial officers accepted that the witness remained ‘unshaken’ when confronted with authoritative scientific criticism.[60]

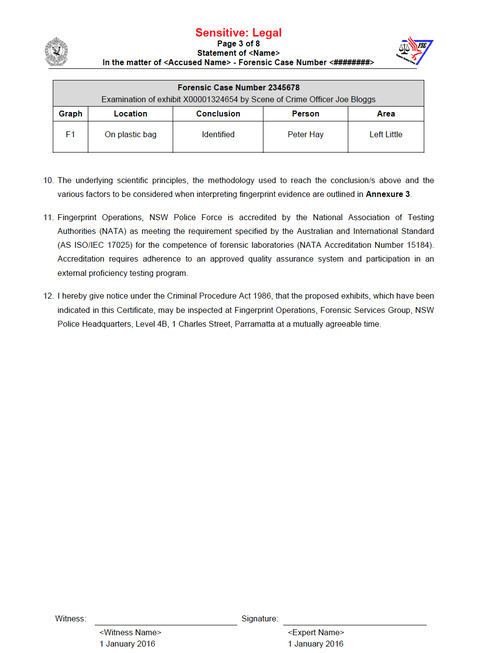

In response to the impending appeal in JP v DPP, NSW Police latent fingerprint examiners began to revise their certificates and reports.[61] The new template (‘Revised Certificate’) is reproduced at the end of this article, as Appendix II.[62]

The first thing to say about the Revised Certificate template is positive. It represents an improvement on what preceded it. Most Australian judges would presume this sort of certificate compliant with relevant procedural rules and deem derivative oral testimony admissible. We should, however, not get carried away with the likelihood or significance of legal endorsement. Considering the longevity and breadth of use, there have been remarkably few sustained challenges to the admissibility or probative value of latent fingerprint evidence.[63] After all, prosecutors have adduced and most courts have admitted the kind of non-compliant certificate tendered in JP v DPP, and similar reports from other forensic domains, for decades. In a large number of cases, where the identity of the offender was in issue, deficient reports passed without objection or judicial comment. The following discussion brings admission and legal credulity into focus. Rather than critical engagement, via cross-examination and sceptical legal norms, prosecutors, judges and defence counsel have, by and large, been acquiescent or complicit in the production, admission and reliance upon inadequate certificates and exaggerated opinions.[64]

At this point we turn to consider this Revised Certificate and, by implication, its predecessors in a little more detail. We are particularly interested in whether the Revised Certificate complies with the terms and spirit of the NSW Code and Forensic Reporting Standards, along with the degree to which it enables a reader to comprehend and evaluate the opinions offered by latent fingerprint examiners.

While the Revised Certificate represents an improvement on past practice there are still very significant problems. These include the description of the underlying procedure (ie, Analysis, Comparison, Evaluation, and Verification (‘ACE-V’)), the level of detail provided, the explanation of the reasoning, and the strength of the opinion expressed.[65] Most troublingly, the value of the evidence and the abilities of latent fingerprint examiners are exaggerated and serious limitations, uncertainties and controversies are not disclosed. Notwithstanding the provision of more descriptive information, those reading the Revised Certificate or hearing testimony based upon it are not placed in a position to rationally assess the putative expertise, the probative value or weight of the opinion.

Our first concern is with the way in which the Revised Certificate explains and frames the ‘methodology’ employed by latent fingerprint examiners. At a general level, the Revised Certificate asserts that:

The ACE-V methodology, as applied by qualified, practising fingerprint experts, has been subject to extensive research and validation studies and has been shown to be highly accurate, reliable and repeatable.[66]

In recent reviews of latent fingerprint evidence, conducted by the US National Academy of Sciences (‘NAS’), the US National Institute of Standards and Technology (‘NIST’) and the President’s Council of Advisors on Science and Technology (‘PCAST’), the allegedly straightforward and efficacious nature of ACE-V was authoritatively questioned. The NAS Report states:

ACE-V provides a broadly stated framework for conducting friction ridge analyses. However, this framework is not specific enough to qualify as a validated method for this type of analysis. ACE-V does not guard against bias; is too broad to ensure repeatability and transparency; and does not guarantee that two analysts following it will obtain the same results. For these reasons, merely following the steps of ACE-V does not imply that one is proceeding in a scientific manner or producing reliable results. A recent paper by Haber and Haber presents a thorough analysis of the ACE-V method and its scientific validity. Their conclusion is unambiguous: ‘We have reviewed available scientific evidence of the validity of the ACE-V method and found none’.[67]

Summarising the implications of the dearth of systematic study on the pattern matching or identification ‘sciences’ – that is, those involving common source attributions – the NAS Report embodies the concerns of attentive scientists, in terms unprecedented for their directness and critical tone:

With the exception of nuclear DNA analysis, however, no forensic method has been rigorously shown to have the capacity to consistently, and with a high degree of certainty, demonstrate a connection between evidence and a specific individual or source.[68]

Problems exposed through these reviews, many unanswered, were familiar to the latent fingerprint examiners responsible for drafting the Revised Certificate. These reviews and others cast latent fingerprint analysis, and the ‘method’ purportedly supporting it, in a light significantly less favourable than suggested in the Revised Certificate. Consequently, presenting ACE-V as a rigorous method capable of producing accurate identifications and effectively eliminating errors misrepresents what is known. It is not an impartial representation. The Revised Certificate misrepresents, through exaggeration and omission, both the value of ACE-V and its ability to eliminate errors, as well as the capacity of the available research to support the validity of the ACE-V ‘method’.

Only in the last few years have the first serious attempts to evaluate the performance of latent fingerprint examiners started, following notorious misattributions by FBI examiners who were applying ACE-V. In the Mayfield case the procedure resulted in three mistaken verifications.[69] Scientific studies in the US and Australia have found that qualified latent fingerprint examiners possess impressive abilities at matching and discriminating between prints. Studies by Ulery, Tangen and colleagues confirmed that latent fingerprint examiners are accurate, and are more accurate than laypersons.[70] However, the recent studies all reported small – though non-trivial – numbers of errors.[71]

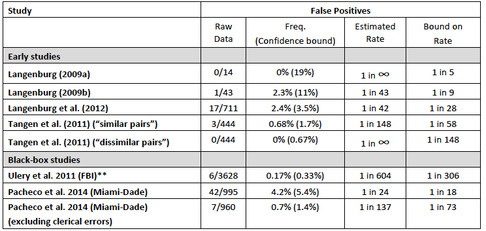

All of the available studies were recently reviewed and summarised by PCAST,[72] which produced the following table:

Table 1: Error Rates in Studies of Latent Print Analysis.[73]

These studies and reviews represent the state of the science. This ‘state of the science’ is not consistent with the summary included in the Revised Certificate. These studies have not validated ACE-V, particularly its application.[74] Rather, the initial studies were concerned with examiners’ willingness and ability to correctly discriminate between fingerprints. That is, scientists have only tested the most fundamental components of fingerprint comparison. Significantly, the claim that ACE-V is valid and effective at eliminating errors is not supported by scientific research.[75]

Examiner recourse to scientific literature and the (strategic) deployment of scientific studies in reports and testimony raises two additional issues, worth considering in a section on methods and ACE-V. The first concerns the ‘training, study or experience’ of latent fingerprint examiners. Many latent fingerprint examiners, most conspicuously those employed by state police services, do not possess formal scientific qualifications. Technically, they are not forensic scientists.[76] While we accept that certified latent fingerprint examiners have demonstrable abilities at comparing prints, their expertise may not extend to research methods, statistics and probabilities, or cognitive science, in order to mitigate contextual biases. This means that where methods and practices are challenged, latent fingerprint examiners and other ‘technicians’ may not be competent to responsibly answer questions and criticisms.[77] Recall the NSW Code’s insistence that experts should not transgress the ‘field of expertise’.[78] The Forensic Reporting Standard cautions them not to ‘knowingly go beyond their area of expertise’.[79]

Breach of these fundamental strictures passes without critical comment in JP v DPP. The examiner ‘conceded that he had not read a lot of the literature referred to by [counsel] in the cross-examination’.[80] Nevertheless, ‘he maintained his view that if the protocol [ie, ACE-V] was followed properly it should not involve bias or incorrect assessment’.[81] The position adopted by the examiner, and supported by the magistrate and judge on appeal, is at odds with the best scientific research and advice. The ‘protocol’ does not necessarily protect against bias, error or inconsistency. Here we can observe a legally-recognised expert, apparently oblivious to or unwilling to engage with the detail of critical reviews by independent and authoritative scientific and technical organisations, and apparently unfamiliar with the detail of supportive research by Ulery et al and Tangen, Thompson and McCarthy, adhering to personal beliefs and impressions.

The second point is that all of the latent fingerprint groups in Australia are aware of the NAS Report, the NIST Report, the Fingerprint Inquiry Report as well as a range of other studies and commentaries. The decision to omit criticisms and even references to these reports from the Revised Certificate is deliberate.[82]

A related deficiency is the failure to explain the reasoning process that has been used by the fingerprint examiner. This was at the centre of the challenge in JP v DPP and yet even the Revised Certificate provides only a schematic overview of the components of ACE-V. The results of analysis, comparison and evaluation, and the specific decisions – around the features observed, their number, any ‘distortion[s]’, and apparent (ie, superficial) differences as well as identifications and non-identifications – are either omitted or asserted rather than explained.[83] This appears inconsistent with the requirements of section 79 of the UEL as well as Makita and Ocean Marine v Jetopay, the NSW Code, the Victorian Practice Note and the Forensic Reporting Standard.

We are not provided with information about: how an examiner determines whether a print is sufficient for analysis and comparison; how the search on the National Automated Fingerprint Identification System (‘NAFIS’) was conducted and whether there was more than one; the type and number of features observed, and how these led to the particular decision – whether ‘Identified’, ‘Inconclusive’, ‘Not identified’ or not suitable for searching.[84] The Revised Certificate does not incorporate or refer to marked-up images (or an expectation that these will be made available to the defence). The Revised Certificate only provides information as to the conclusions of the named expert. We are told about a form of review by way of the general description of the procedure contained within annexure 3, but the Revised Certificate does not indicate who performed the review, what it involved and the circumstances in which it took place. Conclusions remain declaratory; they resemble ‘bare ipse dixit’.[85]

The Revised Certificate describes the ACE-V process in simplistic terms, but there is no explanation of the actual practices or interpretation in the Revised Certificate. There is no indication as to whether ACE-V is undertaken sequentially.[86] Was, for example, ‘Analysis’ conducted before ‘Comparison’, in order to reduce vulnerability to contextual bias? Were the results of each stage documented sequentially? Was ‘Verification’ blind and did the ‘Verification Expert’ agree with the ‘Evaluation’?[87] None of this is discernible. Finally, the conclusions are reported in a tabular form and contain only a single reference to the conclusions being ‘opinion’ (important in the NSW Code, Victorian Practice Note and Forensic Reporting Standard) rather than ‘statement[s] of fact’.[88]

The simplistic description of the ACE-V ‘method’ belies the variability inherent in its operation. The available research suggests that analysis, comparison, evaluation and verification are much more variable than implied by the outline of ACE-V presented in the Revised Certificate.[89] There are few, if any, standards or guidelines regulating practice. What is a feature and how many features or combinations of features are required to call a latent print sufficient for analysis, and to identify or exclude? What makes a latent print too distorted for analysis?[90] When can anomalies be considered to be caused by distortion or some other interference? How much variation should be tolerated? The lack of standardisation is inconsistent with the advice of independent scientific reviewers and likely to introduce uncertainty and increase (subjective) variation and error in fingerprint comparison and evaluation regardless of whether these are reported.[91]

The Revised Certificate tends to extrapolate from relatively limited research on the capacity of (some) latent fingerprint examiners to differentiate between prints to general conclusions as to the validity and accuracy of ACE-V. A corollary of this is the manner in which the Revised Certificate deals with error. In approaching limitations, uncertainty and errors, it is useful to reproduce the relevant section, revealingly titled ‘Potential for Error’:

The comparison of fingerprint impressions is a task conducted by humans, and subsequently there exists a potential for error. However, studies have demonstrated that qualified, practicing fingerprint experts are ‘exceedingly accurate’ when performing fingerprint identifications. To mitigate risk of error, NSW Police Force – Forensic Services Group incorporates strict peer review practices requiring independent verification of fingerprint identifications by a minimum of one appointed verification expert. My conclusion(s) is not a statement of fact, but one of expert opinion.[92]

In its review of the forensic sciences, the NAS Report adopted a more concrete approach to error and uncertainty:

Few forensic science methods have developed adequate measures of the accuracy of inferences made by forensic scientists. All results for every forensic science method should indicate the uncertainty in the measurements that are made, and studies must be conducted that enable the estimation of those values.[93]

Subsequently, following a review of the surprisingly sparse scientific research, PCAST concluded that:

it would be appropriate to inform jurors that (1) only two properly designed studies of the accuracy of latent fingerprint analysis have been conducted and (2) these studies found false positive rates [ie, misidentifications] that could be as high as 1 in 306 in one study and 1 in 18 in the other study. This would appropriately inform jurors that errors occur at detectable frequencies, allowing them to weigh the probative value of the evidence.[94]

Provision of this information is fundamental because misidentification (ie, the ‘false positive rate’) ‘is likely to be higher than expected by many jurors based on longstanding claims about the infallibility of fingerprint analysis’.[95]

Error is not taken seriously in the Revised Certificate. It is not treated as a real and ubiquitous feature of fingerprint comparison.[96] There is no indication of its incidence or magnitude. Rather, it is represented as something that is implicitly abstract and remote – merely ‘potential’. In the Revised Certificate, the remote possibility of error is attenuated by a misleading reference to the accuracy of fingerprint examiners, recourse to ‘independent verification’, and characterising identification evidence as opinion rather than fact.[97] We address claims about the value of verification in more detail in Part V(F). In the present section we consider the Revised Certificate’s failure to engage with error in the empirical terms implored by the NAS along with the incidental references to the evidence being opinion.[98]

The main limitation is the trivialisation of the real risk of error. Not only is error characterised as abstract and remote, the abilities of examiners in conjunction with their ‘method’ are presented as eliminating even the remote possibility. But, as we have seen in the independent reviews and formal studies, there is little evidence that ACE-V actually reduces or eliminates error. Responding to the submissions of fingerprint examiners, the NAS Report concluded that: ‘Although there is limited information about the accuracy and reliability of friction ridge [ie, fingerprint] analyses, claims that these analyses have zero error rates are not scientifically plausible’.[99]

The Revised Certificate misrepresents the conclusion from Tangen, Thompson and McCarthy cited in support of the accuracy of fingerprints. The relevant paragraph from their conclusions is reproduced below:

We have shown that qualified, court-practicing fingerprint experts are exceedingly accurate compared with novices, but are not infallible. Our experts tended to err on the side of caution by making errors that would free the guilty rather than convict the innocent. Even so, they occasionally made the kind of error that can lead to false convictions. Expertise with fingerprints appears to provide a real performance benefit, but fingerprint experts – like doctors and pilots – make mistakes that can put lives and livelihoods at risk.[100]

The bare claim that fingerprint examiners are ‘exceedingly accurate’ is a partial and potentially misleading use of the findings from this independent scientific research.[101] Significantly, the elision of error and fallibility occurs in the section of the Revised Certificate purporting to deal with the subject of error. Actual cases, as well as findings by Tangen, Thompson and McCarthy and Ulery et al (reported by PCAST), confirm that latent fingerprint examiners make small numbers of errors, including ‘false alarms’ (or misidentifications).[102]

By failing to genuinely engage with uncertainties and error, the NSW Police latent fingerprint examiners have not provided decision-makers with means of evaluating their reports and testimony.[103] While trained and certified latent fingerprint examiners obviously possess genuine expertise – demonstrated in their objectively high accuracy, and accuracy relative to novices – the only independent studies available reported mistakes. Consequently, without insight into actual abilities and general levels of performance, how is the decision-maker to determine whether to accept a particular opinion? How is the decision-maker in JP v DPP to determine whether this opinion evidence can support proof beyond reasonable doubt?[104] The Revised Certificate does not place the decision-maker in a position to rationally evaluate the opinion.[105] In the absence of genuine engagement with error and its incidence, the Crown always gets the benefit of a process that is misleadingly characterised as effectively error free.

The other issue emerging in annexure 3 to the Revised Certificate is the declaration that any identification (or exclusion and so on) is merely an opinion. According to the NSW Code and the Forensic Reporting Standard, this should be made explicit in the text where the opinion (ie, the identification) is expressed. While the change in nomenclature from ‘fact’ to ‘opinion’ is appropriate, and consistent with the recommendations of the Fingerprint Inquiry Report, merely characterising a conclusion as opinion does not address validity, uncertainty, limitations or error. Conceding that something is an opinion does not mean that its probative value will be appropriately discounted by non-technical audiences. Moreover, it does not provide a means of gauging probative value or weight.[106]

One of the problems that plagued the original certificate in JP v DPP and the oral testimony that followed, is that apparent matches between fingerprints were equated with identification in categorical terms. In JP v DPP, the match was characterised as positive identification (or individualisation) of JP. The Revised Certificate maintains this categorical approach in its terminology.[107]

According to the NIST Report:

a fingerprint identification was traditionally considered an ‘individualization,’ meaning that the latent print was considered identified to one finger of a specific individual as opposed to every other potential source in the universe. However, the recent attention focused on this issue reveals that this definition needlessly claims too much, is not adequately established by fundamental research, and is impossible to validate solely on the basis of experience.[108]

The original certificate, oral testimony and Revised Certificate all ‘needlessly claim too much’. Identification or individualisation is inconsistent with the best scientific advice, as well as practices in analogous comparison procedures that have been formally validated – eg, DNA profiling. Scientifically-based DNA profiling evidence is, for example, reported in probabilistic terms or as a likelihood ratio derived from the application of population statistics to genetics. The results of DNA testing are almost never reported in terms of positive identification. With latent fingerprints, in contrast, we have a ‘protocol’ that is not standardised, let alone appropriately validated, and yet courts allow latent fingerprint examiners (many without scientific training and qualifications) to express their subjective interpretations as positive evidence of identity and to reject any real possibility of error.

Independent scientists and statisticians who have reviewed the procedures used by latent fingerprint examiners have advised against positively identifying persons in most circumstances.[109] Recommendation 3.7 of the NIST Report states:

Because empirical evidence and statistical reasoning do not support a source attribution to the exclusion of all other individuals in the world, latent print examiners should not report or testify, directly or by implication, to a source attribution to the exclusion of all others in the world.[110]

That is, in most cases, latent fingerprint examiners should not attribute latent fingerprints to a specific individual. In the Fingerprint Inquiry Report, Sir Anthony Campbell recommended that ‘[e]xaminers should discontinue reporting conclusions on identification or exclusion with a claim to 100% certainty or on any other basis suggesting that fingerprint evidence is infallible’.[111]

These authoritative recommendations are neither adopted nor disclosed in the Revised Certificate.[112]

The challenge in JP v DPP included some discussion of the potential for human factors (such as confirmation and contextual biases) to influence the decisions of latent fingerprint examiners. The defence pointed to the limited nature of the comparison the examiner was asked to perform, as well as the fact that he was already aware that the fingerprint had been linked to JP when undertaking his assessment.[113] The Revised Certificate provides more detail than the original certificate, but it does not address vulnerabilities arising from human factors.

Vulnerabilities from human factors, such as suggestion, anchoring and reliance on recollection of the frequency of features, are not ‘hypothetical’.[114] Scientists and biomedical researchers are routinely blinded to avoid notorious dangers, particularly suggestion. Formal training and extensive experience do not enable scientists to resist a range of insidious cognitive influences. This is one of the reasons that most clinical trials are double-blinded. Neither the treating doctors nor the patients are aware of who is receiving the test drug and the comparator or placebo. The US National Commission on Forensic Science, established after the NAS Report, explained:

Contextual bias is not a problem that is unique to forensic science. It is a universal phenomenon that affects decision making by people from all walks of life and in all professional settings. People are particularly vulnerable to contextual bias when performing tasks that require subjective judgment and when they must rely on data that are somewhat ambiguous.

Studies show that the contaminating impact of contextual bias can occur beneath the level of conscious awareness. This finding means that contextual bias is by no means limited to cases of misconduct or bad intent. Rather, exposure to task-irrelevant information can bias the work of FSSPs [forensic science service providers] who perform their job with utmost honesty and professional commitment. Moreover, the nonconscious nature of contextual bias also means that people cannot detect whether they are being influenced by it. It follows that task-irrelevant information can bias the work of FSSPs even when they earnestly and honestly believe they are operating with utmost objectivity.[115]

It is difficult to reconcile the systematic use of blinding procedures in mainstream scientific and biomedical research and the widespread insensitivity to the same risks in the day-to-day work of forensic practitioners.

Scientists reviewing the forensic sciences have repeatedly recommended the use of blinding – as early and for as long as possible.[116] This means exposing forensic practitioners only to information that is required to successfully perform their analysis – such as the provision of prints and maybe information about the surfaces on which the prints were located, along with the methods used to collect and enhance them, for example.[117] This is often described as task- or domain-relevant information. Latent fingerprint examiners do not need to know about other evidence such as a confession, the opinions of police officers about the identity of the offender, the type and seriousness of offence, the suspect’s criminal record or address, and so on. Indeed, exposure to these kinds of gratuitous information has the potential to contaminate decisions – especially where the interpretive task is difficult.[118]

The NAS Report places emphasis on the need to study and reduce the threats posed by contextual bias.[119] The Fingerprint Inquiry Report made three recommendations, designed to reduce exposure to domain-irrelevant information and record exposure where it occurs.[120] The NIST Report on latent fingerprints was primarily concerned with threats posed by bias and other human factors such as vision. The full title of the report is Latent Print Examination and Human Factors: Improving the Practice through a Systems Approach.[121] More recently, the National Commission on Forensic Science made the following recommendations:

1. FSSPs [forensic science service providers] should rely solely on task-relevant information when performing forensic analyses.

2. The standards and guidelines for forensic practice being developed by the Organization of Scientific Area Committees (OSAC) should specify what types of information are task-relevant and task-irrelevant for common forensic tasks.

3. Forensic laboratories should take appropriate steps to avoid exposing analysts to task-irrelevant information through the use of context management procedures detailed in written policies and protocols.[122]

Dangers posed by human factors are neither marginal nor remote, nor are they easily resisted by forensic practitioners. Human factors and the risks they introduce are central to recent reviews of the forensic sciences and recommendations for reform. The Revised Certificate does not refer to these dangers; nor does it document the information provided or available to the examiner, or whether the ‘Verification’ process was blind or suggestive.

This omission is not only intentional, it ignores the fact that in response to the NAS Report and NIST Report recommendations, the FBI has refined the way it applies ACE-V. The FBI has adopted ‘linear ACE-V’, a ‘procedure [that] involves temporary masking of reference prints while analysts make and record their initial assessments of the evidentiary prints’.[123] So-called linear ACE-V involves the analyst proceeding through ‘Analysis’ and ‘Comparison’ in sequence and ‘blind’.[124] The examiner should make an assessment of sufficiency and mark the meaningful features of the unknown or latent print before undertaking a comparison with the known print. This marking should be documented and recorded at the time.[125] Latent fingerprint examiners in the pre-eminent American investigative bureau have revised the way they perform ACE-V in response to emerging dangers. The fingerprint examiner in JP v DPP, in contrast, appears to have engaged in analysis and comparison simultaneously. The NSW Police have not revised their procedures to avoid these notorious dangers and there are no references to human factors, alternative procedures, or dangers in the Revised Certificate.

Rather than require forensic practitioners to guard against risks, the magistrate and judge in JP v DPP placed an expectation on the defendant to somehow demonstrate where and how the examiner’s ‘determination was tainted by ... bias or other incorrect assessment’.[126] Such an approach is unrealistic and inconsistent with the allocation of the burden of proof in criminal proceedings.[127] It is the responsibility of the Crown to eliminate doubts whether introduced through inadequate procedures or other sources. We should not expect impecunious defendants to persuade credulous judicial officers, or jurors, of the reality of these dangers or explain their impact on the probative value (or weight) of forensic science evidence.

Verification is the independent analysis, comparison and evaluation of the friction ridge detail carried out by another qualified fingerprint examiner. In the NSW Police Force Forensic Services Group, the verification step is undertaken by a Verification Expert, who is a senior, practicing fingerprint expert appointed to that role based on their skills, knowledge, training and experience in fingerprint analysis.[128]

Reliance is placed on ‘Verification’ in the Revised Certificate. Verification is presented as a form of peer review that removes the ‘potential for error’. The Revised Certificate states that it is performed by a senior latent fingerprint examiner who purportedly undertakes a second and ‘independent analysis, comparison and evaluation’. We question whether verification reduces errors in the stages of analysis, comparison and evaluation. There is little evidence that verification, particularly where the reviewer is not blind, operates in the manner suggested.[129]

The first thing to note is that we are not provided with much detail about ‘Verification’. What does it involve? How are verification experts selected for particular cases? Is everything verified? What information is provided to the examiner engaged in verification? Does, for example, the verifying examiner know the original result? Is the verifying examiner provided with the original examiner’s work notes and conclusions or is the review conducted de novo? Does the fact of, or the need for, verification suggest that another examiner has identified a particular print? If the examiner performing verification knows the original result, even if only by implication, then in what sense can the verification be said to be independent? If the examiner is not provided with the original result, what blinding mechanisms are in place to avoid formal or informal suggestion?[130]

We know that verifiers do not begin the process anew. They rely on the prints and comparators selected by the original examiner. They are only asked to review prints that are said to match – ie, identified to a person of interest. So, the ‘verification expert’ comes to the process – regardless of what they are told and the materials provided to them – aware that another (possibly identified) examiner has already attributed a latent fingerprint to a specific person. The ‘verification expert’ reviews the fingerprints on this suggestive basis. There is no information available to the public about the frequency with which those engaged in verification disagree with original conclusions or question decisions around sufficiency or conclusiveness. The absence of information could be because latent fingerprint examiners do not make mistakes, but this is unlikely and inconsistent with the emerging scientific studies of latent fingerprint examiners in Australia and the US.[131] A more likely explanation is that the way verification is performed in NSW undermines its potential. Verification has not been evaluated. If mistakes or even disagreements are exposed through verification they are not routinely disclosed.[132]

The Revised Certificate’s reliance on accreditation and proficiency testing, to guarantee the method and approach, overstates the significance of compliance with the generic standards. Revealingly, there are no references to the Forensic Reporting Standard for the forensic sciences or the need for special caution with ‘common source opinions’.

The only standard referenced in the Revised Certificate is the International Standard entitled General Requirements for the Competence of Testing and Calibration Laboratories (‘International Standard’), which does not support the validity and reliability of practices undertaken by latent fingerprint examiners.[133] Rather, and in slightly simplified terms, the International Standard is of a general nature and intended to confirm that practices in scientific laboratories are basically consistent with written procedures. The Standard and its assessment by the National Association of Testing Authorities (‘NATA’) involve a paper audit of practices, performed by industry insiders.[134] Given that there are relatively few detailed protocols for ACE-V, many aspects of fingerprint analysis, comparison and evaluation are not standardised, and conformity with the Standard reveals little about the probative value of the evidentiary products.[135] Of significance, there are few specific requirements for reports to be compliant with the Standard. Moreover, we should not overlook the fact that NATA, the organisation responsible for accrediting the NSW Police Forensic Services Group in accordance with the Standard, approved the issuing of certificates and reports – like the one relied upon in JP v DPP – prior to the start of validation research in 2009 and continuing to 2017.

Accreditation is generally highly desirable, but only where the underlying standards and practices informing accreditation are valid and reliable. Accreditation against procedures that are not validated or not operationalised in ways that help to eliminate risks tends to be a ‘whitewash’. Reference to accreditation in the Revised Certificate is used to suggest that procedures and reports are epistemologically robust when all that is being confirmed is that institutions are basically compliant with their own procedures. These may, as in the case of non-blind verification, be untested and not standardised.[136]

Similarly, the proficiency tests used by most Australian police services, for the forensic sciences, are supplied by commercial providers. Something of a misnomer, they do not represent a credible test of proficiency. They are not designed to identify limitations and errors or to improve methods.[137] While even weak proficiency tests might occasionally identify a problem, this is not how they are generally deployed. Rather, what they accomplish for the NSW Police Forensic Services Group is to satisfy a prerequisite for accreditation.[138]

The Revised Certificate does not refer to authoritative independent reviews such as the NAS Report, NIST Report and Fingerprint Inquiry Report. From our perspective, a group endeavouring to impartially serve the courts would not omit such prominent reports.

Over the last two decades there have been a number of high profile reviews by the FBI, NAS, NIST, PCAST and Sir Anthony Campbell, following notorious mistakes in the US and UK.[139] There have also been a large number of critical papers and commentaries by attentive scholars from a variety of disciplinary backgrounds.[140] These are materials that would assist a lawyer or scientist to assess the probative value of latent fingerprint evidence.[141] They explain procedures as well as outline deficiencies and limitations. They discuss relevant research and missing research, and place concerns about methodological limitations and managing human factors in context.

Before moving to consider some of the broader implications of our review, we want to respond to the contention that the oral testimony rectified the inadequate report in JP v DPP. Without descending into detail, our reading of the trial transcript casts doubt on both the adequacy of the testimony of the Crown’s fingerprint expert and the ability of that testimony to repair the shortcomings in the certificate or substantially answer the issues raised during cross-examination. To the extent that the Revised Certificate replicates some of the limitations evident in the original certificate, the same issue arises. The suggestion that answers supplied during oral testimony somehow addressed the kinds of issues raised in this article trivialises the deeply destabilising nature of these fundamental scientific oversights. Notwithstanding inconsistent judicial conclusions, the subjects raised in this article are precisely the kinds of factors that ‘materially [affect]’ the weight of the examiner’s ‘opinion that the fingerprints were identical’.[142]

It is not our intention to suggest that this fingerprint evidence is, or should be, inadmissible. Nor do we contend that the identification in JP v DPP was necessarily mistaken. Rather, as we explained in the previous section, our concerns are primarily with transparency, methodological rigour, legitimate forms of expression and the provision of means to appropriately evaluate or weigh the evidence. There are serious and unanswered limitations with the ‘method’, standards and consistency, categorical expression (ie, individualisation), vulnerability to bias and so on. While it is difficult to imagine a trial judge excluding fingerprint evidence in jurisdictions insensitive to validity and reliability, obviously there are serious problems with the over-claiming associated with individualisation and the non-engagement with scientific research, limitations and error.

Neither the magistrate nor the judge seems to have recognised the magnitude of the epistemic issues raised by the defence. Difficulties experienced by judicial officers would seem to be difficulties that might confront jurors where they are presented with latent fingerprint evidence. Problems identified by authoritative scientific reviewers, explored with varying degrees of insight and clarity during the trial, were not credibly addressed by the magistrate or the judge. The magistrate’s limited response to the admissibility challenge is reproduced below:

In this matter I have oral and written evidence from [the examiner]. His evidence was unshaken on his view as to the matching of the thumbprint of [JP]. In my view I disagree with the submissions in this matter, he has given sufficient evidence in these proceedings as to how he reached that determination. As an expert his expertise was not shaken, his opinion was not shaken. He is tasked, as he said, purely to compare W3 to [JP’s] prints. There is clearly in terms of the procedures involved, checks and balances in place. He acknowledged he is aware of case studies where potential impacts and bias of proceedings have occurred. His view as the expert in the field or presented as the expert in this matter is that where the appropriate procedures have taken place, is unlikely to have those errors occur. He also conceded that he had not read a lot of the literature referred to by Ms Graham in the cross-examination. Again he maintained his view that if protocol was followed properly it should not involve bias or incorrect assessment.

The difficulty of course with a lot of material that was cross-examined on is there is no method, no chance to actually test the validity of those arguments.

...

I have no evidence before this Court of the method used in this instance by [the examiner] ... [not transcribable] ... helping assist in his determination was tainted by the bias or other incorrect assessment by not following the protocols. I have no expert evidence on the defence showing in this particular matter that the thumbprint is not or could not be the accused’s. I say that of course there remains at all times the prosecutions responsibility to prove the matter beyond reasonable doubt. It was suggested that [the examiner] was contradictory or failed to make proper concessions, I actually find to the contrary. He answered appropriately in all circumstances especially where the questions were extremely open-ended and hypothetical. He did not attempt in any way to make his evidence or his position any greater than what it should in terms of the protocols that were involved.

...

The evidence by [the examiner] in giving his opinion in determination has not been proved forensically challenged in this matter. There is no Court decisions making such material unacceptable. What has been raised and I accept is that perhaps it is unreliable. ... At best I have nothing else binding before me that would exclude the evidence of [the examiner]. I can only scrutinise it on the material before me specific to this case. I accept [the examiner’s] evidence in that regard.[143]

The magistrate treated defence concerns about methodological limitations, exaggerated expression and bias as hypothetical issues. Rather than require the Crown to demonstrate that its routine procedures protect examiners from scientifically notorious dangers, there is an expectation that the defence will identify actual mistakes and errors – implicitly through an expert witness. Defence concerns, built on authoritative scientific literature and recommendations, are dismissed because ‘there is no method, no chance to actually test the[ir] validity’. This response is unprincipled. Rather than require the Crown to credibly demonstrate that procedures routinely used to identify persons are valid and performed in ways that minimise known risks of error, the magistrate discounted the impact of questions and criticisms because he held that there was no chance to test the validity of the questions and criticisms and no alternative expert witness asserted that the fingerprint was not JP’s. The advice and recommendations of independent scientists, and modifications to practices by leading forensic science institutions (such as the FBI) in response to scientific advice around bias (such as linear ACE-V), might be thought to disrupt the dismissive attitude toward the defence’s concerns.

Consider also the superficial treatment of continuing problems with latent fingerprint evidence in the appeal judgment:

[w]hile a number of criticisms were made of [the examiner’s] evidence it was open to his Honour to conclude that there was no material to indicate that, to the extent the criticisms were sustained, they materially affected the weight to be attached to [the examiner’s] opinion that the fingerprints were identical. Otherwise his Honour had the distinct advantage of being able to observe [the examiner] give evidence and respond to criticism.[144]

In context, this summary is not merely unpersuasive, but surely mistaken. The criticisms and their sources must materially impact upon the weight of the opinion. The exaggerated form of the opinion (ie, positive identification), misrepresentation of the ‘method’ and its value, inattention to limitations, uncertainty and error, omission of and non-engagement with contextual bias and human factors, and the examiner’s reluctance or inability to make appropriate concessions must reduce probative value and weight.[145] Furthermore, the ability to observe the examiner’s demeanour and responses to questions did not provide the magistrate with any significant advantage over those reading through the transcript or this article.[146] The impressions and beliefs of a latent fingerprint examiner, however experienced, do not overcome scientific studies or their absence, insufficiently detailed protocols, and exaggerated abilities and conclusions.[147] The performative dimensions of the exchanges can hardly be a factor in addressing the probative value of the procedure or the propriety of categorical ‘common source attributions’. To the more technically proficient, the examiner’s performance appears naïve. Several responses in cross-examination were misguided, partial or misleading.[148] They were not informed by relevant ‘specialised knowledge’.

Nevertheless, this was law’s crude attempt to enable engagement with epistemic issues confronting fingerprint evidence emerging a century after Australian courts first began to rely upon it.[149] Decisions by the magistrate and judge privilege the status quo but without credible engagement with the validity and reliability of the evidence. Courts and latent fingerprint examiners seem very reluctant to engage in appropriate forms of re-calibration.

While the Revised Certificate does represent an improvement over the certificate relied on in JP v DPP and earlier reports, it does not constitute a sufficiently transparent or serious engagement with the broad range of issues and challenges confronting contemporary latent fingerprint examiners. It does not provide a clear indication of the known value of the conclusion or the means to assign one. In concluding, we intend to raise some of the policy implications. These include the system costs flowing from judicial accommodation and legal reliance on the adversarial process both to identify deficiencies in the evidence and, paradoxically, repair those deficiencies. Excusing routinely deficient certificates and reports is unlikely to encourage widespread institutional reform or scientific research. Many forensic practitioners look to the courts, rather than relevant scientific communities, for recognition and legitimacy. If a contested, inadequate and purportedly expert certificate or report can be repaired through cross-examination, how are those accused of criminal offences expected to make sensible pleas or tactical decisions ahead of trial?